1. Introduction

Precise and unambiguous forest type identification is essential when evaluating forest ecological systems for environmental management practices. Forests are the largest land-based carbon pool, accounting for about 85% of the total biomass of land vegetation [1], and forest soils hold as much as 73% of the global soil carbon [2]. As the largest reservoir of biological resources, forests play an irreplaceable role in ecosystem management for climate change mitigation, environmental improvement and ecological security [3,4,5]. A common goal in climate change mitigation is to reduce forest damage and increase forest resources [6]. Progress toward this goal can be measured by precise estimation of carbon storage, which in turn depends on accurate mapping of forest types. Traditional forest surveys rely on random sampling and field investigation within each sample plot, a time-consuming and laborious process [7,8]. Remote sensing can obtain forest information from rugged or inaccessible areas, complementing traditional methods while reducing the need for fieldwork. It is therefore worthwhile to explore the potential of multi-source remote sensing data for obtaining explicit and detailed information on forest types.

Many remote sensing datasets have been explored for identifying forest types, with acceptable classification results. High-resolution images acquired by the WorldView-2 satellite sensor were used to map forest types in Burgenland, Austria, with promising results [9]. However, high-resolution images are often expensive. Besides high-resolution optical imagery, Synthetic Aperture Radar (SAR) is also useful for forest mapping, because the microwave energy emitted by SAR sensors can penetrate the canopy and interact with different parts of trees, producing strong volume scattering. Qin et al. [10] demonstrated the potential of integrating PALSAR and MODIS images to map forests over large areas. In addition, topographic information is strongly correlated with the spatial distribution of forest types [11]. Strahler et al. [12] demonstrated that the accuracy of species-specific forest type classification from Landsat imagery can be improved by 27% or more by adding topographic information. Other studies also showed that topographic information can improve the overall accuracy of forest type classification by more than 10% [13,14]. Topographic information can be represented by a Digital Elevation Model (DEM) or by variables derived from a DEM [13]. Beyond these datasets, Light Detection and Ranging (LiDAR) data have been combined with multispectral and hyperspectral images to identify forest types, because multispectral and hyperspectral sensors can capture subtle differences in leaf morphology among forest types, while LiDAR can detect their different vertical structures [15]. These studies, using high-resolution images, SAR data, DEMs, or LiDAR combined with multispectral/hyperspectral images, have demonstrated potential for classifying forest types. However, the main limitations of these datasets are high cost and low temporal resolution. It is therefore necessary to explore the potential of free data, and especially of configurations of multi-source free imagery, for identifying forest types.

Most studies of forest type identification use remote sensing data from one or two sensor types, and only a few evaluate the ability of multi-source images acquired from three or more sensors to identify forest types [16]. The study in [17], for instance, evaluated the potential of Sentinel-2A for classifying seven forest types in Bavaria, Germany, using object-oriented random forest classification, and obtained an overall accuracy of approximately 66%. Ji et al. [18] reported that a phenology-informed composite image was more effective for classifying forest types than a single-scene image, because the composite captured within-scene variations in forest type phenology. Zhu et al. [19] mapped forest types using dense seasonal Landsat time series. The time-series data improved mapping accuracy by about 12%, indicating that the phenological information contained in multi-seasonal images helps discriminate between forest types. However, the accuracy for seven major forest types remained between 60% and 70%, which is relatively low for forest type identification [17,20]. Imagery acquired from a single sensor may therefore be insufficient for forest type mapping. Multi-sensor images might compensate for the information missing from a single sensor and improve classification accuracy [7,10,21]. Likewise, forest type classification using multi-temporal imagery can achieve better results than using single-date images [7,22,23]. However, few studies have mapped forest types using freely available multi-source and time-series imagery.

Concerning classification algorithms, Object-Based Image Classification (OBIC) is very appealing for land cover identification. OBIC can fuse multiple data sources at different spatial resolutions [24] and can further improve classification results [25,26]. Random Forest (RF) is a highly flexible ensemble learning method that has received increasing attention in remote sensing classification [24,27,28,29]. RF can effectively handle high-dimensional, noisy and multi-source datasets and is robust to over-fitting [30,31]. It has achieved higher classification accuracy than other well-known classifiers such as Support Vector Machines (SVM) and Maximum Likelihood (ML) [28,32], and the variable importance it estimates can be used to analyze the input features [33]. Moreover, RF has few parameters to set and requires no sophisticated parameter tuning.

Because Sentinel-2A, DEM, time-series Landsat-8 and Sentinel-1A imagery are all available at no cost, we aimed to evaluate their potential for mapping forest types with the random forest approach in Wuhan, China. We address the following four research questions: (1) Which imagery is more suitable for classifying forest types, Sentinel-2A or Landsat-8? (2) How does the DEM contribute to forest type identification? (3) Can the combined use of multi-temporal images acquired from Landsat-8, Sentinel-2A and DEM significantly improve the classification results? (4) Can Sentinel-1A contribute to improving classification accuracy?

3. Methods

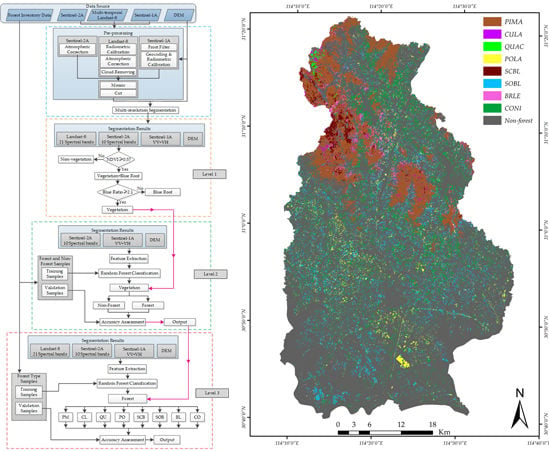

The proposed method, illustrated in Figure 4, has four key components: image segmentation, vegetation extraction, forested area extraction and forest type identification. Image preprocessing (Section 3.1) and multi-scale segmentation (Section 3.2) were applied to produce image objects. The object-based Random Forest (RF) approach (Section 3.3) was used for the hierarchical classification (Section 3.4). Threshold analysis based on the NDVI and the Ratio Blue Index (RBI) was used to extract vegetation in the Level-1 classification (Section 3.4.1). The vegetation was then divided into forest and non-forest in the Level-2 classification (Section 3.4.2), and eight forest types were identified at Level-3 (Section 3.4.3). For the Level-2 and Level-3 classifications, the reference data were split into training and validation sets: 3/4 of the samples of each class were randomly selected for training, and the remaining 1/4 were used for accuracy assessment.
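As a minimal sketch of the per-class 3/4 to 1/4 split described above (not the authors' code), the following Python function shuffles the object indices of each class with a fixed seed and keeps 75% of them for training. The function name, the seed and the rounding rule are illustrative assumptions.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.75, seed=0):
    """Split object indices class by class: train_fraction for training,
    the remainder for validation. Returns two sorted index lists."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # random selection within each class
        n_train = round(len(indices) * train_fraction)
        train.extend(indices[:n_train])
        test.extend(indices[n_train:])
    return sorted(train), sorted(test)
```

Splitting within each class keeps rare forest types represented in both the training and the validation sets.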

In this pipeline, the stages are performed in sequence. Preprocessing and multi-scale segmentation are applied to the multi-source images, generating image objects that serve as the basic input for the subsequent steps. Vegetation is obtained at Level-1 by NDVI and RBI threshold analysis. From this vegetation, forested areas are extracted at Level-2 and non-forest areas are removed. The forest type map is produced by the Level-3 classification, which yields eight dominant forest types.

3.1. Data Preprocessing

For the multispectral Sentinel-2A (S2) images, we performed atmospheric correction with the SNAP 5.0 and Sen2Cor 2.12 software provided by ESA. Two Sentinel-2A scenes were mosaicked and then clipped to the boundary of the study area. The 60-m bands were not used; the remaining 10-m and 20-m bands were resampled to 10 m with the nearest neighbor algorithm [37]. The downloaded Landsat-8 images were processed in ENVI 5.3.1 as follows: radiometric calibration and atmospheric correction were performed with the FLAASH atmospheric model, and clouds were removed using a cloud mask built from the Landsat-8 quality assessment band. The images were then mosaicked and clipped to the boundary of the study area. Finally, the Landsat-8 images were co-registered to the Sentinel-2A images, and their first seven bands were resampled to 10 m following [37].

Sentinel-1A data were preprocessed in SARscape 5.2.1, using a Frost filter to reduce speckle noise. The backscatter values were radiometrically calibrated to eliminate terrain effects and geocoded by converting from slant-range or ground-range geometry to the UTM/WGS84 coordinate system. Because the calibrated linear values are very small, we converted them to decibels (dB):

$$N_{\mathrm{dB}} = 10\log_{10}(N)$$

where $N$ is the per-pixel value obtained by geocoding and radiometric calibration. The Sentinel-1A images were co-registered to the Sentinel-2A images, resampled to 10-m resolution following [37], mosaicked and clipped to the study area.
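The decibel conversion above is a one-line operation. The sketch below assumes the calibrated values arrive as a NumPy array and clips non-positive values (for example no-data zeros) to a small floor before taking the logarithm; the floor is an illustrative choice, not part of the SARscape workflow described in the text.

```python
import numpy as np


def to_db(n_linear, floor=1e-10):
    """Convert calibrated linear backscatter N to dB: 10 * log10(N)."""
    n_linear = np.asarray(n_linear, dtype=float)
    # Clip zeros/negatives so log10 stays finite (illustrative floor value).
    return 10.0 * np.log10(np.maximum(n_linear, floor))
```

For example, linear values of 1, 0.1 and 0.01 map to 0, −10 and −20 dB.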

For the five SRTM1 DEM images, mosaicking and clipping were performed first; the DEM was then co-registered to the Sentinel-2A images and resampled to 10-m spatial resolution following [37]. Slope and aspect were derived from the clipped DEM.
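Slope and aspect can be derived from the DEM with finite differences. The sketch below uses NumPy central differences (`np.gradient`) on the 10-m grid; it assumes a north-up raster (rows run north to south) and reports aspect as the downslope direction in degrees clockwise from north. GIS packages often use a 3 × 3 kernel (e.g., Horn's method) instead, so results may differ slightly on rough terrain.

```python
import numpy as np


def slope_aspect(dem, cell_size=10.0):
    """Slope (degrees) and aspect (degrees clockwise from north) of a DEM.
    Assumes rows run north to south and columns west to east."""
    dem = np.asarray(dem, dtype=float)
    # Derivatives along axis 0 (rows) and axis 1 (columns)
    dz_drow, dz_dcol = np.gradient(dem, cell_size)
    dz_dx = dz_dcol    # change toward the east
    dz_dy = -dz_drow   # change toward the north (row index grows southward)
    slope = np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))
    # Aspect: compass bearing of steepest descent, i.e. of -grad(z)
    aspect = np.degrees(np.arctan2(-dz_dx, -dz_dy)) % 360.0
    return slope, aspect
```

A plane that rises 10 m per 10-m cell toward the east yields a 45° slope facing west (270°).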

3.2. Image Segmentation by MRS

Image classification is based on the analysis of image objects obtained by Multi-Resolution Segmentation (MRS), a bottom-up region-merging technique. Objects are generated by successively merging neighboring pixels or small segmented objects. Each merge is chosen so that the resulting increase in average heterogeneity is as small as possible, which keeps the pixels within each object as homogeneous as possible [38]. Our image segmentation was performed with eCognition Developer 8.7. Four key parameters control MRS: the scale, the weights of color and shape, the weights of compactness and smoothness, and the weights of the input layers. The scale parameter determines the size of the final image objects, as it corresponds to the maximum heterogeneity allowed when generating them [39,40]: the larger the scale parameter, the larger the generated objects, and vice versa. The homogeneity criterion consists of two parts, color (spectral) and shape, whose weights sum to 1.0 and can be set according to the characteristics of the land cover types in the images. Shape is in turn composed of smoothness and compactness: the former describes how smooth the border of the segmented object is, and the latter how compact the whole object is. The weights of smoothness and compactness also sum to 1.0. The input layer weights set how much each band contributes to the segmentation and can be chosen according to the requirements of the application.
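To make the roles of the scale, shape and compactness parameters concrete, the sketch below computes the fusion cost of merging two objects in the form commonly attributed to Baatz and Schäpe's multiresolution segmentation. It is not eCognition's implementation: the `SegmentStats` structure and the function names are hypothetical, and accepting a merge when the cost is below the squared scale parameter is an assumption based on common descriptions of the algorithm.

```python
import math
from dataclasses import dataclass


@dataclass
class SegmentStats:
    n: int                 # number of pixels in the object
    stds: list             # per-band standard deviation of pixel values
    perimeter: float       # border length l
    bbox_perimeter: float  # perimeter b of the bounding box


def fusion_cost(o1, o2, merged, band_weights, w_shape=0.1, w_compact=0.5):
    """Heterogeneity increase f caused by merging o1 and o2 into `merged`."""
    # Color part: weighted growth of size-weighted standard deviation
    h_color = sum(w * (merged.n * sm - (o1.n * s1 + o2.n * s2))
                  for w, sm, s1, s2 in zip(band_weights, merged.stds, o1.stds, o2.stds))

    def compact(o):  # n * l / sqrt(n)
        return o.n * o.perimeter / math.sqrt(o.n)

    def smooth(o):   # n * l / b
        return o.n * o.perimeter / o.bbox_perimeter

    h_compact = compact(merged) - (compact(o1) + compact(o2))
    h_smooth = smooth(merged) - (smooth(o1) + smooth(o2))
    h_shape = w_compact * h_compact + (1.0 - w_compact) * h_smooth
    return (1.0 - w_shape) * h_color + w_shape * h_shape


def accept_merge(cost, scale=30):
    """Assumed stopping rule: merge only while f stays below scale squared."""
    return cost < scale ** 2
```

With a shape weight of 0.1, spectral homogeneity dominates the cost, and the shape term acts only as a mild regularizer on object outlines.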

In this study, the four 10-m bands of Sentinel-2A (Bands 2, 3, 4 and 8) were used as MRS input layers to define objects, and the resulting segment boundaries were applied to the other images. MRS was run with different parameter sets, and the results were assessed visually by comparing how well the segmented objects matched the reference polygons, following a trial-and-error approach [17]. The selected values were 30, 0.1 and 0.5 for the scale, shape and compactness parameters, respectively.
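Applying the Sentinel-2A segment boundaries to the other co-registered 10-m layers amounts to computing per-object statistics over a shared label raster. A minimal NumPy sketch, assuming object ids are consecutive integers starting at 0 so that every id covers at least one pixel:

```python
import numpy as np


def object_means(labels, band):
    """Mean value of `band` inside each object of a label raster (ids 0..K-1)."""
    labels = np.asarray(labels).ravel()
    values = np.asarray(band, dtype=float).ravel()
    sums = np.bincount(labels, weights=values)  # per-object sum of pixel values
    counts = np.bincount(labels)                # per-object pixel count
    return sums / counts
```

The same label raster can be reused for every Landsat-8, Sentinel-1A and DEM layer, so all features refer to identical objects.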

3.3. Object-Based Random Forest

To handle a large number of input variables with limited sample data, the Level-2 and Level-3 classifications were performed with an object-based Random Forest (RF) classifier [31]. RF is an ensemble learning method that combines many classification or regression trees [29], using bagging (bootstrap aggregating) to build a large number of different training subsets [41,42]. Samples not drawn into a tree's bootstrap subset are left out of its training and can be used to evaluate that tree. Each decision tree “votes” for a class, and the final class is the one that receives the largest number of votes [24]. The approach can handle thousands of input variables without variable deletion, and its randomization makes it robust to noise and over-fitting. It also provides an estimate of the importance of each variable [42].

Variable importance is estimated from an internal, unbiased error estimate based on Out-Of-Bag (OOB) sampling: for each tree, the training objects left out of its bootstrap sample are not used to construct that tree. There are two common ways to calculate variable importance: the Gini impurity method and the permutation variable importance approach [31]. The former is based on impurity reduction, while the latter, used in our RF analysis, is based on randomly permuting the values of each variable. Permutation importance is computed as follows. After a tree is grown, its prediction accuracy on the OOB observations is calculated. The values of the variable of interest are then randomly permuted across the OOB observations, which destroys its association with the output, and the accuracy is computed again. The importance of the variable for that tree is the difference between the two accuracies, and its final importance is the average of this difference over all trees in the forest. The same procedure is repeated for every other variable.
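The permutation procedure above can be sketched as follows. Each tree is represented by a prediction function and the indices of its OOB rows; this interface is a hypothetical simplification for illustration, not OpenCV's API.

```python
import numpy as np


def permutation_importance(trees, X, y, seed=0):
    """Mean OOB permutation importance of every feature.

    trees: list of (predict, oob_idx) pairs, where predict maps an (n, d)
    array to n labels and oob_idx lists the rows left out of that tree's
    bootstrap sample.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    importance = np.zeros(X.shape[1])
    for predict, oob_idx in trees:
        X_oob, y_oob = X[oob_idx], y[oob_idx]
        base_acc = np.mean(predict(X_oob) == y_oob)   # accuracy before permuting
        for j in range(X.shape[1]):
            X_perm = X_oob.copy()
            # Shuffle variable j across OOB rows, breaking its link to y
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            importance[j] += base_acc - np.mean(predict(X_perm) == y_oob)
    return importance / len(trees)  # average over all trees
```

A variable the trees never use gets an importance of exactly zero, because permuting it cannot change any prediction.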

In this study, the random forest classifier, including OOB estimation, was implemented with OpenCV 2.4.10. Three parameters must be set: the number of decision trees (NTree), the number of variables randomly considered at each node (SubVar) and the maximum depth of a tree (MaxDepth). For all tested scenarios, we set NTree to 20, MaxDepth to 6 and SubVar to one quarter of the total number of input variables.
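The sketch below is a compact, illustrative random forest in NumPy that exposes the same three parameters (NTree = 20, MaxDepth = 6, SubVar = one quarter of the variables). It is not the OpenCV 2.4.10 implementation used in the study: it grows Gini-based binary trees on bootstrap samples, tries SubVar randomly chosen variables at each node, records each tree's OOB indices and classifies by majority vote. Class labels are assumed to be integers 0..K−1.

```python
import numpy as np


def gini(y, n_classes):
    """Gini impurity of an integer label array."""
    if y.size == 0:
        return 0.0
    p = np.bincount(y, minlength=n_classes) / y.size
    return 1.0 - float(np.sum(p * p))


def build_tree(X, y, depth, max_depth, sub_var, n_classes, rng):
    """Leaves are class ids; inner nodes are (feature, threshold, left, right)."""
    counts = np.bincount(y, minlength=n_classes)
    if depth >= max_depth or counts.max() == y.size:
        return int(np.argmax(counts))
    best, best_score = None, gini(y, n_classes)
    # Only SubVar randomly chosen variables are tried at this node
    for f in rng.choice(X.shape[1], size=sub_var, replace=False):
        values = np.unique(X[:, f])
        for t in (values[:-1] + values[1:]) / 2.0:
            left = X[:, f] <= t
            n_left = int(left.sum())
            score = (n_left * gini(y[left], n_classes)
                     + (y.size - n_left) * gini(y[~left], n_classes)) / y.size
            if score < best_score:
                best, best_score = (int(f), float(t), left), score
    if best is None:  # no split reduces impurity
        return int(np.argmax(counts))
    f, t, left = best
    args = (max_depth, sub_var, n_classes, rng)
    return (f, t, build_tree(X[left], y[left], depth + 1, *args),
            build_tree(X[~left], y[~left], depth + 1, *args))


def predict_one(node, x):
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


class RandomForest:
    def __init__(self, n_tree=20, max_depth=6, sub_var=None, seed=0):
        self.n_tree, self.max_depth, self.sub_var = n_tree, max_depth, sub_var
        self.rng = np.random.default_rng(seed)

    def fit(self, X, y):
        X, y = np.asarray(X, dtype=float), np.asarray(y, dtype=int)
        n, d = X.shape
        self.n_classes = int(y.max()) + 1
        # Default SubVar: one quarter of the input variables (at least 1)
        self.sub_var_ = self.sub_var or max(1, d // 4)
        self.trees, self.oob = [], []
        for _ in range(self.n_tree):
            idx = self.rng.integers(0, n, size=n)               # bootstrap sample
            self.oob.append(np.setdiff1d(np.arange(n), idx))    # OOB rows
            self.trees.append(build_tree(X[idx], y[idx], 0, self.max_depth,
                                         self.sub_var_, self.n_classes, self.rng))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        votes = np.array([[predict_one(t, x) for x in X] for t in self.trees])
        # Majority vote over trees for each sample
        return np.array([np.bincount(votes[:, i], minlength=self.n_classes).argmax()
                         for i in range(X.shape[0])])
```

Keeping trees shallow (MaxDepth = 6) and the ensemble small (20 trees) keeps training cheap; the stored OOB indices are what an OOB error or permutation-importance estimate would use.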

3.4. Object-Based Hierarchical Classification

The forest type map was obtained through a hierarchical strategy: vegetation classification at the first level, forest land extraction at the second level and forest type classification at the third level.

3.4.1. Vegetation Classification at Level-1

The first level of classification extracts vegetation from the land cover in two steps. First, vegetation (together with blue roofs, which have similar NDVI values) is extracted by Normalized Difference Vegetation Index (NDVI) threshold analysis; second, blue roofs misclassified as vegetation are removed by Ratio Blue Index (RBI) threshold analysis. NDVI has been widely used for vegetation extraction in studies of global land processes [43,44]. In this study, it was calculated from the 10-m near-infrared and red bands of Sentinel-2A:

$$\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}}$$

where $\rho_{\mathrm{NIR}}$ and $\rho_{\mathrm{Red}}$ are the reflectances of the near-infrared and red bands, respectively.

The NDVI threshold is determined by the stepwise approximation method [20]: an initial threshold is first set from the NDVI histogram and then adjusted step by step until the extracted vegetation objects best match the vegetation identified by visual inspection. However, blue building roofs and vegetation overlap in NDVI, so some blue roofs can be misclassified as vegetation. Compared with vegetation, blue roofs have a higher mean reflectance in the visible bands and a lower reflectance in the near-infrared band [45]. To separate blue roofs from vegetation, a Ratio Blue Index (RBI) threshold analysis is further carried out, using the same stepwise approximation as for vegetation extraction. RBI is the ratio of the near-infrared band to the blue band:

$$\mathrm{RBI} = \frac{\rho_{\mathrm{NIR}}}{\rho_{\mathrm{Blue}}}$$

where $\rho_{\mathrm{NIR}}$ and $\rho_{\mathrm{Blue}}$ are the reflectances of the near-infrared and blue bands, respectively. In this study, we set the NDVI threshold to 0.57 to remove non-vegetation and the RBI threshold to 2.1 to remove blue roofs; the remaining pure vegetation is passed on to the Level-2 classification.
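The two Level-1 rules can be expressed as boolean masks over per-object mean reflectances. In this sketch, vegetation is assumed to satisfy NDVI ≥ 0.57, and blue roofs are assumed to fall below RBI = 2.1 because of their higher blue and lower near-infrared reflectance; the direction of each comparison and the example reflectances in the usage check are illustrative assumptions.

```python
import numpy as np

NDVI_THRESHOLD = 0.57  # value reported in this study
RBI_THRESHOLD = 2.1    # value reported in this study


def level1_vegetation(nir, red, blue):
    """Boolean vegetation mask from per-object mean reflectances.
    Assumes vegetation has NDVI >= 0.57 and blue roofs have RBI < 2.1."""
    nir, red, blue = (np.asarray(b, dtype=float) for b in (nir, red, blue))
    ndvi = (nir - red) / (nir + red)
    rbi = nir / blue
    candidate = ndvi >= NDVI_THRESHOLD          # vegetation plus some blue roofs
    return candidate & (rbi >= RBI_THRESHOLD)   # drop blue roofs
```

Applying the NDVI rule first and the RBI rule only to its survivors mirrors the two-step order described above; since both are per-object masks, the result is the same as a single combined test.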

3.4.2. Forestland Classification at Level-2

The second level (Level-2) of the hierarchy separates forest from non-forest objects within the vegetation extracted at Level-1, using the RF approach. Four RF experiments were carried out to evaluate the relative contributions of S2, S1, DEM and L8 to forest land extraction. To do so, spectral, texture and topographic features were first extracted from the segmented objects. The spectral features comprise surface reflectance and vegetation indices; the latter combine visible and near-infrared bands into simple, effective and empirical measures of surface vegetation condition. Texture features are calculated with texture filters based on the gray-level co-occurrence matrix. This matrix depends on both the angle and the distance between two neighboring pixels and records how often each pair of gray levels occurs between a pixel and its specified neighbor [46,47]. The filters include mean, variance, homogeneity, contrast, dissimilarity, entropy, second moment and correlation. To reduce the high dimensionality and redundancy of the texture features, Principal Component Analysis (PCA) is applied following [48] to obtain a more accurate classification result. As shown in Table 6, a total of 36 features derived from Sentinel-2A and DEM imagery were chosen to extract the forested areas, including 21 spectral features, 12 textural features and three topographic features.
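For illustration, the sketch below builds a symmetric, normalized co-occurrence matrix for one pixel offset and computes the eight measures listed above with common (Haralick-style) definitions. It assumes the band has already been quantized to a few gray levels and that offsets are non-negative; the window size, offsets and quantization used in the study are not given here, and the software actually used may define some measures slightly differently.

```python
import numpy as np


def glcm(image, dx=1, dy=0, levels=8):
    """Symmetric, normalized co-occurrence matrix for offset (dy, dx) >= 0.
    `image` must already be quantized to integers in [0, levels)."""
    img = np.asarray(image, dtype=int)
    h, w = img.shape
    a = img[: h - dy, : w - dx]  # reference pixels
    b = img[dy:, dx:]            # neighbors at the given offset
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)
    m = m + m.T                  # count each pair in both directions
    return m / m.sum()


def texture_features(p):
    """The eight texture measures computed from a normalized GLCM p."""
    i, j = np.indices(p.shape)
    mean = np.sum(i * p)
    var = np.sum((i - mean) ** 2 * p)
    nz = p > 0
    return {
        "mean": mean,
        "variance": var,
        "homogeneity": np.sum(p / (1.0 + (i - j) ** 2)),
        "contrast": np.sum((i - j) ** 2 * p),
        "dissimilarity": np.sum(np.abs(i - j) * p),
        "entropy": -np.sum(p[nz] * np.log(p[nz])),
        "second_moment": np.sum(p ** 2),
        # Undefined for a constant patch; set to 1.0 here by convention
        "correlation": np.sum((i - mean) * (j - mean) * p) / var if var > 0 else 1.0,
    }
```

Because the matrix is symmetric, the row and column means and variances coincide, which is why a single `mean` and `var` suffice for the correlation term.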

As presented in Table 6, the spectral features include the mean value of each Sentinel-2A band and vegetation indices. In this study, we extracted the texture features from the 10-m Sentinel-2A bands (Sb2, Sb3, Sb4 and Sb8) and retained three principal components from PCA, which explain 95.02% of the variance of our texture features. Topographic features are represented by the DEM, aspect and slope, with aspect and slope derived from the DEM. RF takes these 36 features from the S2 and DEM imagery as input and outputs the forested areas, which in turn serve as input for the Level-3 classification.
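A minimal sketch of the PCA step, assuming an objects × texture-measures matrix. Standardizing each measure before the decomposition is our assumption (the text does not say whether it was done). The function returns the component scores and the fraction of variance explained by the kept components, the quantity reported above as 95.02%.

```python
import numpy as np


def pca_reduce(features, n_components=3):
    """Project an objects-by-features matrix onto its leading principal
    components; return (scores, explained-variance fraction of those components)."""
    X = np.asarray(features, dtype=float)
    Xc = X - X.mean(axis=0)
    # Assumed: standardize so measures on different scales contribute comparably
    std = Xc.std(axis=0)
    Xc = Xc / np.where(std > 0, std, 1.0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)       # variance fraction per component
    scores = Xc @ vt[:n_components].T         # component scores per object
    return scores, float(np.sum(explained[:n_components]))
```

Highly redundant measures collapse onto few components; in the extreme case of perfectly correlated columns, one component explains all of the variance.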

3.4.3. Forest Type Classification at Level-3

The main objective of the Level-3 classification is to divide the forested area into more detailed forest types: four single-dominant forest types and four mixed forest types, according to their actual distribution in our study area. Among the mixed types, CONI is more easily distinguished than the other three because of significant spectral differences. For coniferous forests (e.g., PIMA and CULA) or broadleaf forests (e.g., QUAC and POLA), it is more difficult to identify the types from a single dataset because their spectra are highly similar. Other features, such as topographic and phenological information, are therefore needed to improve the classification. In this study, a multi-source dataset (S1, S2, DEM and L8) was obtained from four kinds of sensors. As shown in Table 7, 59 features derived from these multi-source images (21 spectral features from S2, three topographic features derived from the DEM, two backscattering features from S1 and 33 spectral features from the L8 time-series images) were used to find the best configuration for classifying the eight forest types.

As shown in Table 7, the 21 spectral features extracted from the S2 images consist of the mean spectral value of each band and vegetation indices that combine visible and near-infrared bands, such as the Normalized Difference Vegetation Index (NDVI), Ratio Vegetation Index (RVI), Enhanced Vegetation Index (EVI) and Difference Vegetation Index (DVI). The three topographic features are the DEM, aspect and slope, with aspect and slope calculated from the DEM. The two backscattering features (VV, VH) obtained from Sentinel-1A are also used in forest type mapping. The 33 spectral features derived from the multi-temporal L8 images are chosen to capture temporal information reflecting physiological growth and phenological changes. Forest type identification was performed by RF using combinations of these features, and the accuracy was significantly improved by the temporal information added from the L8 imagery.
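The vegetation indices named above are simple band arithmetic. The sketch below uses their standard definitions; for EVI it assumes the common coefficients (G = 2.5, C1 = 6, C2 = 7.5, L = 1), since the text does not state which were used. It works on float reflectances or NumPy arrays of per-object means.

```python
def vegetation_indices(blue, red, nir):
    """Standard vegetation indices from surface reflectance (floats or arrays).
    EVI coefficients are the commonly used ones (an assumption here)."""
    return {
        "NDVI": (nir - red) / (nir + red),
        "RVI": nir / red,
        "DVI": nir - red,
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
    }
```

For reflectances blue = 0.05, red = 0.1 and NIR = 0.5, this gives NDVI ≈ 0.667, RVI = 5, DVI = 0.4 and EVI ≈ 0.580.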

5. Discussion

This study demonstrates, through comparative analysis of multiple scenario experiments, that freely accessible multi-source imagery (Sentinel-1A, Sentinel-2A, DEM and multi-temporal Landsat-8) can significantly improve the overall accuracy of forest type identification. We obtained a more accurate forest type map than previous studies using Sentinel-2A or similar free satellite images. Immitzer et al. [17] mapped forest types in Central Europe using Sentinel-2A data and an object-oriented RF algorithm, with an overall accuracy of 66.2%. Dorren et al. [13] combined Landsat TM and DEM images to identify forest types in the Montafon with a maximum likelihood classifier (MLC) and obtained an overall accuracy of 73%. In addition, this study identified more forest types, especially mixed forest types, than previous studies [17,20]. Compared with the Landsat-8 imagery in Scenario 2, using only the Sentinel-2A imagery improved the overall accuracy by 4.31%, indicating that Sentinel-2A imagery is more helpful for mapping forest types. The slope feature (Figure 7) derived from the DEM contributed the most to classifying forest types, indicating that the spatial distribution of forest types is strongly influenced by topography. The Sentinel-1A images also contributed, improving classification accuracy by approximately 2.65% (Scenario 5 in Table 9).
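Overall accuracy, as compared across scenarios above, is conventionally computed from a confusion matrix of the validation objects. The sketch below assumes rows hold reference classes and columns hold mapped classes, and it also returns per-class producer's and user's accuracies, where confusion between specific forest types becomes visible.

```python
import numpy as np


def accuracy_report(cm):
    """cm[i, j] = number of validation objects of reference class i mapped as j.
    Returns (overall accuracy, producer's accuracies, user's accuracies)."""
    cm = np.asarray(cm, dtype=float)
    diag = np.diag(cm)
    overall = diag.sum() / cm.sum()
    producers = diag / cm.sum(axis=1)  # per reference class (1 - omission error)
    users = diag / cm.sum(axis=0)      # per mapped class (1 - commission error)
    return overall, producers, users
```

Differences between scenarios, such as the 4.31% reported above, are then differences of these overall accuracies.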

Regarding the multi-source imagery employed, these data consistently improved the overall accuracy of forest type identification. The time-series Landsat-8 and Sentinel-2A data, covering the crucial growing and leaf-off seasons of the forest, provide phenological information that improves the discrimination between forest types. This result agrees well with [19,50], who found that time-series images yield much higher accuracy for mapping tropical forest types. Macander et al. [53] also used seasonal composite Landsat imagery and the random forest method for plant type cover mapping and found that multi-temporal imagery improved cover classification. Topographic information derived from the DEM captures geomorphological characteristics that reflect the habitats of different forest types and thus helps to identify them, similar to the findings of [13,50]. From the experiments using only Landsat-8 or only Sentinel-2A imagery, we conclude that Sentinel-2A achieves a higher overall accuracy owing to its higher spatial resolution (Table 9 and Table 10). This result is consistent with [50], who concluded that classification using Sentinel-2 images performed better than classification using Landsat-8 data.

Regarding the hierarchical classification strategy based on object-oriented RF, it combines data from multiple sources more flexibly and breaks a complex classification problem down into several simpler ones. Zhu et al. [19] successfully mapped forest types with a two-level hierarchical classification using dense seasonal Landsat time-series images in Vinton County, Ohio. In our study, a three-level hierarchical classification was carried out to map forest types. First, we extracted vegetation from the land cover using two threshold analyses based on NDVI and RBI. The RBI threshold analysis effectively removed blue roofs misclassified as vegetation, because RBI exploits the different spectral characteristics of blue roofs and vegetation in the blue and near-infrared bands. For the second-level classification, Sentinel-2A and DEM images were sufficient to separate forest land from non-forest land. In the third-level classification, we fused DEM, time-series L8, S2 and S1 imagery to better exploit the habitat, structure and phenology of the forest types. Without a hierarchical classification, combining all multi-source data to identify all classes at once would be time-consuming. In addition, the hierarchical strategy allows the RF parameters to be tuned separately at each classification level to obtain optimal results, and it lets the RF classifier assess the importance of features for the classes of interest at each level rather than for all classes.

Regarding the classification algorithm and classifier performance, the object-based Random Forest (RF) method proved highly effective for identifying forest types in our tested scenarios. In the hierarchical classification, RF uses a subset of features at each level of the hierarchy, so that classification decisions rest on the effective features, which reduces the error associated with the structural risk of the entire feature vector. Novack et al. [54] showed that the RF classifier evaluates each attribute internally and is thus less sensitive to an increasing number of variables (Table 5 and Table 6). The object-based classifier can provide faster and better results and can easily be applied to classify forest types [24,39,40,55]. In addition, the method handles predictor variables with multimodal distributions, which arise from high variability in time and space, well [50,56], and it requires no sophisticated parameter tuning. Thus, it could obtain a higher overall accuracy.

Regarding the feature importance for the most accurate forest type classification (Figure 7), the input features were evaluated by the permutation method [33], and the resulting feature ranking is consistent with some previous studies [51,57,58]. The slope and phenological features, which had the highest importance scores, were the two most pivotal features for forest type identification. As listed in Table 8, classification accuracy was significantly improved by combining phenological information from multi-temporal L8 images with Sentinel-2A imagery, which raised the overall accuracy by at least 22.51% (Scenario 7); this result is in line with [18,53], who reported that multi-temporal imagery was more effective for plant type cover identification. The vegetation indices computed from the red and near-infrared bands of Sentinel-2A also contribute to forest type classification, and other features extracted from Sentinel-2A, DEM and multi-temporal Landsat-8 images (e.g., L8_June b6, L8_March b4) are also ranked as important. Removing any feature can reduce the overall accuracy, emphasizing the importance of topographic and phenological information for forest type identification. In addition, the backscattering features from the Sentinel-1A VV images improved forest type classification by nearly 2.65% between Scenarios 4 and 5 (Table 8), demonstrating that VV-polarized radar images can help to improve classification accuracy. In contrast, using both VV and VH features from Sentinel-1 in Scenario 6 (Table 8) was less successful, decreasing the overall accuracy by 1.32% compared with Scenario 5, which indicates that VV polarization discriminates forest types better than VH polarization. The importance scores of the features in Figure 7 indicate that the 43 features extracted from the multi-source remote sensing data can be applied to map forest types.

This study suggests that freely available multi-source remotely sensed data have potential for forest type identification and offer a new option for monitoring and managing forest ecological resources without commercial satellite imagery. Experimental results for eight forest types show that the proposed method achieves high classification accuracy. However, misclassification still occurred between forest types with similar spectral characteristics during the same phenological period. As the assessment report in Table 9 shows, confusion between BRLE and PIMA, and between CULA and PIMA, was mainly caused by their very high spectral similarity, especially in old-growth forests where shadowing, variable tree health and bidirectional reflectance effects are present [51,59]. Future improvements will therefore include obtaining more accurate phenological information from Sentinel-2 and using 3D data such as freely available laser scanning data [60,61,62,63]. Overall, the proposed method has potential for mapping forest types, as all required remotely sensed data are free.

6. Conclusions

In this study, we used freely accessible multi-source remotely sensed images to classify eight forest types in Wuhan, China. A hierarchical classification approach based on the object-oriented RF algorithm was used to identify forest types from Sentinel-1A, Sentinel-2A, DEM and multi-temporal Landsat-8 images. The eight forest types comprise four single-dominant forest types (PIMA, CULA, QUAC and POLA) and four mixed forest types (SCBL, SOBL, BRLE and CONI), and the forest type samples were manually delineated using forest inventory data. To improve the discrimination between forest types, phenological and topographic information were used in the hierarchical classification. The final forest type map, obtained through this hierarchical strategy, achieved an overall accuracy of 82.78%, demonstrating that combining multiple sources of remotely sensed data has the potential to map forest types at regional or global scales.

Although the spatial resolution of the multispectral images is not very high, their higher spectral resolution can compensate for this deficiency, especially for Sentinel-2A images. The best results obtained from the combination of multi-source images are comparable to those of previous studies using high-spatial-resolution images [64,65]. These free multi-source remotely sensed images (Sentinel-2A, DEM, Landsat-8 and Sentinel-1A) provide a feasible alternative for forest type identification. Because Sentinel-2A data were of limited availability for this study, the multi-temporal Landsat-8 data were used to extract phenological information. In the future, the twin Sentinel-2 satellites will provide free, dense time-series images with a five-day global revisit cycle, offering great potential for further improving forest type identification. In general, the configuration of Sentinel-2A, DEM, Landsat-8 and Sentinel-1A has the potential to identify forest types at regional or global scales.