Infrared Small Target Detection via Non-Convex Rank Approximation Minimization Joint l2,1 Norm

Abstract

1. Introduction

2. Related Works

2.1. Methods of Background-Based Assumption

2.2. Methods of Target-Based Assumption

2.3. Methods of Target and Background Separating

- (1) We developed a novel infrared small target detection method based on non-convex rank approximation minimization and the weighted l1 norm. These surrogates approximate the rank function and the l0 norm more closely than the convex nuclear norm and l1 norm, which gives the method a better ability to separate the target from the background than state-of-the-art methods, including IPI, NIPPS, ReWIPI, SMSL, and RIPT.

- (2) Because most existing approaches leave residual strong edges in the target image, we introduced an additional regularization term on these remaining edges using the l2,1 norm, motivated by the linearly structured sparsity of most interference sources.

- (3) We presented an optimization algorithm based on the alternating direction method of multipliers (ADMM) combined with difference-of-convex (DC) programming to solve the proposed model. Because its constraints are stronger than those of IPI-based methods, the proposed algorithm converged faster.
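
Contribution (2) relies on the l2,1 norm. For reference, its standard definition is given below, assuming the column-wise convention (a row-wise variant is also common), together with its proximal operator. The norm sums the Euclidean lengths of whole columns, so minimizing it tends to make entire columns zero while leaving a few structured columns nonzero; its proximal step shrinks each column as a unit.

```latex
\|E\|_{2,1} = \sum_{j} \|E_{:,j}\|_2 = \sum_{j} \Big( \sum_{i} E_{ij}^{2} \Big)^{1/2},
\qquad
\Big[ \operatorname{prox}_{\tau \|\cdot\|_{2,1}}(Q) \Big]_{:,j}
  = \max\!\Big( 1 - \frac{\tau}{\|Q_{:,j}\|_2},\; 0 \Big)\, Q_{:,j}
  \quad (\text{taken as } 0 \text{ when } Q_{:,j} = 0).
```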

3. Proposed Method

3.1. The Surrogate of Rank

3.2. The Surrogate of Sparsity

3.3. Solution of the NRAM Model

| Algorithm 1: ALM/DC solver for the NRAM model |
|---|
| Input: original patch-image D; parameters λ, β, μ0, γ |
| While not converged do |
| 1: Fix the other variables and update B by DC programming (Algorithm 2); |
| 2: Fix the other variables and update T; |
| 3: Fix the other variables and update E; |
| 4: Fix the other variables and update Y; |
| 5: Update W; |
| 6: Update μ; |
| 7: Check the convergence conditions; |
| 8: Update k; |
| End while |
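
Steps 2 to 7 of Algorithm 1 can be sketched in NumPy as follows. This is a minimal sketch, not the authors' code: it assumes the model min ‖B‖_γ + λ‖W ⊙ T‖₁ + β‖E‖₂,₁ subject to D = B + T + E, solved with an augmented Lagrangian with multiplier Y and penalty μ. The reweighting rule W = C / (|T| + ε), the growth factor `rho`, the helper names and all default values are hypothetical choices for illustration. The B-update of step 1 is not included.

```python
import numpy as np


def soft_threshold(X, tau):
    """Entry-wise soft thresholding: proximal operator of tau * |x| (tau may be an array)."""
    return np.sign(X) * np.maximum(np.abs(X) - tau, 0.0)


def l21_shrink(Q, tau):
    """Column-wise shrinkage: proximal operator of tau * ||.||_{2,1} (column convention assumed)."""
    norms = np.linalg.norm(Q, axis=0)
    # Scale factor max(1 - tau / ||q_j||, 0); all-zero columns stay zero.
    scale = np.maximum(1.0 - tau / np.maximum(norms, 1e-12), 0.0)
    return Q * scale  # broadcasts one factor per column


def alm_steps_2_to_7(D, B, E, Y, W, mu, lam, beta, C=1.0, rho=1.1, eps_w=1e-2):
    """Hypothetical helper: steps 2-7 of one ALM iteration, given the already-updated B."""
    # Step 2: weighted-l1 proximal step for the target component T.
    T = soft_threshold(D - B - E + Y / mu, lam * W / mu)
    # Step 3: l2,1 proximal step for the structured-edge component E.
    E = l21_shrink(D - B - T + Y / mu, beta / mu)
    # Step 4: dual ascent on the Lagrange multiplier Y.
    residual = D - B - T - E
    Y = Y + mu * residual
    # Step 5: reweighting; small entries of T receive large weights (assumed rule).
    W = C / (np.abs(T) + eps_w)
    # Step 6: increase the penalty parameter (assumed geometric growth).
    mu = rho * mu
    # Step 7: relative reconstruction error, usable as a stopping criterion.
    rel_err = np.linalg.norm(residual, "fro") / np.linalg.norm(D, "fro")
    return T, E, Y, W, mu, rel_err
```

The T- and E-steps are closed-form proximal operators, which is what keeps each ALM iteration cheap; only the B-step needs an inner loop.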

| Algorithm 2: DC programming |
|---|
| While not converged do |
| 1: Update the iterates; |
| 2: Check the convergence conditions; |
| End while |
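
DC programming for the B-update can be sketched as follows. This is a minimal sketch assuming the γ-norm surrogate of Kang et al. (Robust PCA via Nonconvex Rank Approximation, in the reference list), ‖B‖_γ = Σᵢ (1 + γ)σᵢ / (γ + σᵢ), and the subproblem min_B ‖B‖_γ + (μ/2)‖B − A‖²_F with A = D − T − E + Y/μ. Because the surrogate is concave in each singular value, each DC iteration linearizes it at the current iterate, which turns the subproblem into a weighted soft-thresholding of the singular values of A. Function names and the stopping tolerance are hypothetical.

```python
import numpy as np


def gamma_norm(sigma, gamma):
    """Rank surrogate: sum of (1 + gamma) * s / (gamma + s) over the singular values s."""
    return np.sum((1.0 + gamma) * sigma / (gamma + sigma))


def dc_singular_values(delta, gamma, mu, tol=1e-6, max_iter=100):
    """DC iterations for min_s sum(phi(s_i)) + mu/2 * ||s - delta||^2 with s >= 0.

    phi(s) = (1 + gamma) * s / (gamma + s) is concave on s >= 0, so each step
    replaces phi by its tangent at the current iterate and solves the resulting
    weighted soft-thresholding problem in closed form.
    """
    sigma = delta.copy()
    for _ in range(max_iter):
        # Derivative of phi at the current iterate acts as a per-value weight.
        w = gamma * (1.0 + gamma) / (gamma + sigma) ** 2
        sigma_new = np.maximum(delta - w / mu, 0.0)
        converged = np.linalg.norm(sigma_new - sigma) <= tol * max(np.linalg.norm(sigma), 1.0)
        sigma = sigma_new
        if converged:
            break
    return sigma


def update_B(A, gamma, mu):
    """B-step: shrink the singular values of A and rebuild the low-rank matrix."""
    U, delta, Vt = np.linalg.svd(A, full_matrices=False)
    sigma = dc_singular_values(delta, gamma, mu)
    return (U * sigma) @ Vt  # equals U @ diag(sigma) @ Vt
```

Large singular values are barely shrunk while small ones are zeroed, which is why this surrogate tracks the rank more closely than the nuclear norm: with γ = 0.01, `gamma_norm` of the singular values {10, 0, 0} is about 1.01, whereas the nuclear norm is 10.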

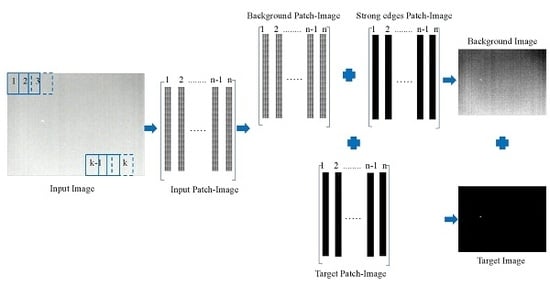

3.4. The Whole Process of the Proposed Method

- (1) Patch-image construction. A window of fixed size (50 × 50 with a sliding step of 10 in our experiments) slides over the original infrared image from top to bottom and from left to right, and the pixels inside each window are vectorized into one column of the patch-image D. D therefore has t columns, where t is the number of window positions.

- (2) Target-background separation. The input patch-image D was decomposed into a low-rank matrix B, a sparse matrix T, and a structural noise (strong edge) matrix E. Since the structural noise belongs to the background, we took the sum B + E as the final recovered background patch-image.

- (3) Image reconstruction and target detection. Reconstruction is the inverse of construction: for pixel positions covered by several overlapping patches, we applied a one-dimensional median filter to the overlapping values to determine the pixel value. Small targets were then detected by adaptive threshold segmentation, with the threshold selected as in [21].
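
Steps (1) and (3) can be sketched in NumPy as follows; the separation of step (2) is omitted. This is a minimal sketch under stated assumptions: the 50 × 50 patch size and sliding step of 10 come from the parameter table, the median over overlapping patches follows step (3), pixels not covered by any window are set to 0, and the threshold uses a simple mean-plus-k-standard-deviations form with a hypothetical `k_sigma`. All function names are illustrative.

```python
import numpy as np


def window_positions(shape, patch, step):
    """Top-left corners: top to bottom within each column of windows, left to right overall."""
    height, width = shape
    return [(r, c)
            for c in range(0, width - patch + 1, step)
            for r in range(0, height - patch + 1, step)]


def build_patch_image(img, patch=50, step=10):
    """Vectorize every window into one column of the patch-image D (shape patch*patch x t)."""
    cols = [img[r:r + patch, c:c + patch].reshape(-1)
            for r, c in window_positions(img.shape, patch, step)]
    return np.stack(cols, axis=1)


def reconstruct(patch_image, shape, patch=50, step=10):
    """Inverse of build_patch_image: each pixel takes the median of the patches covering it."""
    height, width = shape
    k = -(-patch // step)  # ceil(patch / step): windows k steps apart never overlap
    layers = np.full((k * k, height, width), np.nan)
    for j, (r, c) in enumerate(window_positions(shape, patch, step)):
        layer = (r // step % k) * k + (c // step % k)
        layers[layer, r:r + patch, c:c + patch] = patch_image[:, j].reshape(patch, patch)
    covered = ~np.all(np.isnan(layers), axis=0)
    out = np.zeros(shape)  # uncovered border pixels stay 0 (assumption)
    out[covered] = np.nanmedian(layers[:, covered], axis=0)
    return out


def segment(target_img, k_sigma=3.0):
    """Adaptive threshold mean + k_sigma * std (assumed form; k_sigma is hypothetical)."""
    thr = target_img.mean() + k_sigma * target_img.std()
    return target_img > thr
```

Stacking non-overlapping windows into separate layers lets `np.nanmedian` compute the per-pixel median in one vectorized call instead of keeping a Python list per pixel.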

4. Experiment and Analysis

4.1. Experimental Preparation

4.2. Evaluation Metrics

4.3. Parameter Analysis

4.3.1. Penalty Factor

4.3.2. Norm Factor

4.3.3. Compromising Constant C

4.4. Qualitative Evaluation under Different Scenes

4.5. Quantitative Evaluation of Sequences

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Luo, J.H.; Ji, H.B.; Liu, J. An algorithm based on spatial filter for infrared small target detection and its application to an all directional IRST system. Proc. SPIE 2007, 6279.

- Tonissen, S.M.; Evans, R.J. Performance of dynamic programming techniques for Track-Before-Detect. IEEE Trans. Aerosp. Electron. Syst. 1996, 32, 1440–1451.

- Reed, I.S.; Gagliardi, R.M.; Stotts, L.B. Optical moving target detection with 3-D matched filtering. IEEE Trans. Aerosp. Electron. Syst. 1988, 24, 327–336.

- Zhang, H.Y.; Bai, J.J.; Li, Z.J.; Liu, Y.; Liu, K.H. Scale invariant SURF detector and automatic clustering segmentation for infrared small targets detection. Infrared Phys. Technol. 2017, 83, 7–16.

- He, Y.-J.; Li, M.; Zhang, J.; Yao, J.-P. Infrared target tracking via weighted correlation filter. Infrared Phys. Technol. 2015, 73, 103–114.

- Wang, W.; Li, C.; Shi, J. A robust infrared dim target detection method based on template filtering and saliency extraction. Infrared Phys. Technol. 2015, 73, 19–28.

- Zhang, H.; Zhang, L.; Yuan, D.; Chen, H. Infrared small target detection based on local intensity and gradient properties. Infrared Phys. Technol. 2018, 89, 88–96.

- Wang, X.Y.; Peng, Z.M.; Zhang, P.; He, Y.M. Infrared Small Target Detection via Nonnegativity-Constrained Variational Mode Decomposition. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1700–1704.

- Tom, V.T.; Peli, T.; Leung, M.; Bondaryk, J.E. Morphology-based algorithm for point target detection in infrared backgrounds. In Proceedings of the Signal and Data Processing of Small Targets, Orlando, FL, USA, 22 October 1993; pp. 2–12.

- Deshpande, S.D.; Er, M.H.; Venkateswarlu, R.; Chan, P. Max-mean and max-median filters for detection of small targets. In Proceedings of the Signal and Data Processing of Small Targets, Denver, CO, USA, 4 October 1999; pp. 74–84.

- Kim, S.; Yang, Y.Y.; Lee, J.; Park, Y. Small Target Detection Utilizing Robust Methods of the Human Visual System for IRST. J. Infrared Millim. Terahertz Waves 2009, 30, 994–1011.

- Shao, X.P.; Fan, H.; Lu, G.X.; Xu, J. An improved infrared dim and small target detection algorithm based on the contrast mechanism of human visual system. Infrared Phys. Technol. 2012, 55, 403–408.

- Wang, X.; Lv, G.F.; Xu, L.Z. Infrared dim target detection based on visual attention. Infrared Phys. Technol. 2012, 55, 513–521.

- Chen, C.L.P.; Li, H.; Wei, Y.T.; Xia, T.; Tang, Y.Y. A Local Contrast Method for Small Infrared Target Detection. IEEE Trans. Geosci. Remote Sens. 2014, 52, 574–581.

- Han, J.H.; Ma, Y.; Zhou, B.; Fan, F.; Liang, K.; Fang, Y. A Robust Infrared Small Target Detection Algorithm Based on Human Visual System. IEEE Geosci. Remote Sens. Lett. 2014, 11, 2168–2172.

- Qin, Y.; Li, B. Effective Infrared Small Target Detection Utilizing a Novel Local Contrast Method. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1890–1894.

- Han, J.H.; Liang, K.; Zhou, B.; Zhu, X.Y.; Zhao, J.; Zhao, L.L. Infrared Small Target Detection Utilizing the Multiscale Relative Local Contrast Measure. IEEE Geosci. Remote Sens. Lett. 2018, 15, 612–616.

- Wei, Y.T.; You, X.G.; Li, H. Multiscale patch-based contrast measure for small infrared target detection. Pattern Recogn. 2016, 58, 216–226.

- Li, J.; Duan, L.Y.; Chen, X.W.; Huang, T.J.; Tian, Y.H. Finding the Secret of Image Saliency in the Frequency Domain. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 2428–2440.

- Tang, W.; Zheng, Y.B.; Lu, R.T.; Huang, X.S. A Novel Infrared Dim Small Target Detection Algorithm based on Frequency Domain Saliency. In Proceedings of the 2016 IEEE Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC 2016), Xi’an, China, 3–5 October 2016; pp. 1053–1057.

- Gao, C.Q.; Meng, D.Y.; Yang, Y.; Wang, Y.T.; Zhou, X.F.; Hauptmann, A.G. Infrared Patch-Image Model for Small Target Detection in a Single Image. IEEE Trans. Image Process. 2013, 22, 4996–5009.

- Ganesh, A.; Lin, Z.C.; Wright, J.; Wu, L.Q.; Chen, M.M.; Ma, Y. Fast Algorithms for Recovering a Corrupted Low-Rank Matrix. In Proceedings of the 2009 3rd IEEE International Workshop on Computational Advances in Multi-Sensor Adaptive Processing (CAMSAP 2009), Aruba, Dutch Antilles, The Netherlands, 13–16 December 2009; pp. 213–216.

- Kim, H.; Choe, Y. Background Subtraction via Truncated Nuclear Norm Minimization. In Proceedings of the 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Kuala Lumpur, Malaysia, 12–15 December 2017; pp. 447–451.

- Gu, S.H.; Zhang, L.; Zuo, W.M.; Feng, X.C. Weighted Nuclear Norm Minimization with Application to Image Denoising. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 2862–2869.

- Hu, Y.; Zhang, D.B.; Ye, J.P.; Li, X.L.; He, X.F. Fast and Accurate Matrix Completion via Truncated Nuclear Norm Regularization. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2117–2130.

- Sun, Q.; Xiang, S.; Ye, J. Robust principal component analysis via capped norms. In Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Chicago, IL, USA, 11–14 August 2013; pp. 311–319.

- Nie, F.; Huang, H.; Ding, C.H. Low-rank matrix recovery via efficient schatten p-norm minimization. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012; pp. 655–661.

- Guo, J.; Wu, Y.Q.; Dai, Y.M. Small target detection based on reweighted infrared patch-image model. IET Image Process. 2018, 12, 70–79.

- Zhang, T. Analysis of Multi-stage Convex Relaxation for Sparse Regularization. J. Mach. Learn. Res. 2010, 11, 1081–1107.

- He, Y.J.; Li, M.; Zhang, J.L.; An, Q. Small infrared target detection based on low-rank and sparse representation. Infrared Phys. Technol. 2015, 68, 98–109.

- Boyd, S.; Parikh, N.; Chu, E.; Peleato, B.; Eckstein, J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found. Trends Mach. Learn. 2011, 3, 1–122.

- Lin, Z.; Chen, M.; Ma, Y. The Augmented Lagrange Multiplier Method for Exact Recovery of Corrupted Low-Rank Matrices. arXiv 2010, arXiv:1009.5055.

- Gu, S.H.; Xie, Q.; Meng, D.Y.; Zuo, W.M.; Feng, X.C.; Zhang, L. Weighted Nuclear Norm Minimization and Its Applications to Low Level Vision. Int. J. Comput. Vis. 2017, 121, 183–208.

- Xue, S.; Qiu, W.; Liu, F.; Jin, X. Low-Rank Tensor Completion by Truncated Nuclear Norm Regularization. arXiv 2017, arXiv:1712.00704.

- Cao, W.F.; Wang, Y.; Sun, J.; Meng, D.Y.; Yang, C.; Cichocki, A.; Xu, Z.B. Total Variation Regularized Tensor RPCA for Background Subtraction From Compressive Measurements. IEEE Trans. Image Process. 2016, 25, 4075–4090.

- Tao, P.D.; An, L.T.H. Convex analysis approach to dc programming: Theory, algorithms and applications. Acta Math. Vietnam. 1997, 22, 289–355.

- Hadhoud, M.M.; Thomas, D.W. The two-dimensional adaptive LMS (TDLMS) algorithm. IEEE Trans. Circuits Syst. 1988, 35, 485–494.

- Zhao, Y.; Pan, H.; Du, C.; Peng, Y.; Zheng, Y. Bilateral two-dimensional least mean square filter for infrared small target detection. Infrared Phys. Technol. 2014, 65, 17–23.

- Bae, T.W.; Zhang, F.; Kweon, I.S. Edge directional 2D LMS filter for infrared small target detection (vol 55, pg 137, 2012). Infrared Phys. Technol. 2012, 55, 220.

- Bae, T.W.; Kim, Y.C.; Ahn, S.H.; Sohng, K.I. A novel Two-Dimensional LMS (TDLMS) using sub-sampling mask and step-size index for small target detection. IEICE Electron. Express 2010, 7, 112–117.

- Li, Z.J.; Zhang, H.Y.; Bai, J.J.; Zhou, Z.J.; Zheng, H.H. A Speeded-up Saliency Region-based Contrast Detection Method for Small Targets. SPIE Proc. 2018, 10615.

- Dai, Y.M.; Wu, Y.Q.; Song, Y. Infrared small target and background separation via column-wise weighted robust principal component analysis. Infrared Phys. Technol. 2016, 77, 421–430.

- Dai, Y.M.; Wu, Y.Q.; Song, Y.; Guo, J. Non-negative infrared patch-image model: Robust target-background separation via partial sum minimization of singular values. Infrared Phys. Technol. 2017, 81, 182–194.

- Oh, T.H.; Kim, H.; Tai, Y.W.; Bazin, J.C.; Kweon, I.S. Partial Sum Minimization of Singular Values in RPCA for Low-Level Vision. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 145–152.

- Li, M.; He, Y.-J.; Zhang, J. Small Infrared Target Detection Based on Low-Rank Representation. In Proceedings of the International Conference on Image and Graphics, Tianjin, China, 13–16 August 2015; pp. 393–401.

- Liu, D.; Li, Z.; Liu, B.; Chen, W.; Liu, T.; Cao, L. Infrared small target detection in heavy sky scene clutter based on sparse representation. Infrared Phys. Technol. 2017, 85, 13–31.

- Wang, X.Y.; Peng, Z.M.; Kong, D.H.; He, Y.M. Infrared Dim and Small Target Detection Based on Stable Multisubspace Learning in Heterogeneous Scene. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5481–5493.

- Dai, Y.M.; Wu, Y.Q. Reweighted Infrared Patch-Tensor Model With Both Nonlocal and Local Priors for Single-Frame Small Target Detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 3752–3767.

- Benedek, C.; Descombes, X.; Zerubia, J. Building Development Monitoring in Multitemporal Remotely Sensed Image Pairs with Stochastic Birth-Death Dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 33–50.

- Kang, Z.; Peng, C.; Cheng, Q. Robust PCA via Nonconvex Rank Approximation. In Proceedings of the 2015 IEEE International Conference on Data Mining; pp. 211–220.

- Fazel, M.; Hindi, H.; Boyd, S.P. Log-det heuristic for matrix rank minimization with applications to Hankel and Euclidean distance matrices. In Proceedings of the 2003 American Control Conference, Denver, CO, USA, 4–6 June 2003; pp. 2156–2162.

- Wright, J.; Ganesh, A.; Rao, S.; Peng, Y.; Ma, Y. Robust principal component analysis: Exact recovery of corrupted low-rank matrices via convex optimization. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 7–10 December 2009; pp. 2080–2088.

- Peng, Y.; Suo, J.; Dai, Q.; Xu, W. Reweighted low-rank matrix recovery and its application in image restoration. IEEE Trans. Cybern. 2014, 44, 2418–2430.

- Liu, Z.S.; Li, J.C.; Li, G.; Bai, J.C.; Liu, X.N. A New Model for Sparse and Low-Rank Matrix Decomposition. J. Appl. Anal. Comput. 2017, 7, 600–616.

- Yuan, M.; Lin, Y. Model selection and estimation in regression with grouped variables. J. R. Stat. Soc. Ser. B Stat. Methodol. 2006, 68, 49–67.

| Methods | Advantages | Disadvantages |
|---|---|---|
| IPI | Works well with uniform scenes. | Over-shrinks small targets, leaving residuals in the target image; time-consuming. |
| NIPPS | Works well when strong edges and non-target interferences are few. | Difficult to estimate the rank of the data; fails to eliminate strong edges and non-target interference. |
| ReWIPI | Works well when the background changes slowly. | Sensitive to rare highlighted borders; performance degrades as scene complexity increases. |
| LRR | Works well with simple scenes. | Cannot handle complex backgrounds. |
| LRSR | Works well with homogeneous backgrounds. | Difficult to choose two dictionaries simultaneously; leaves noise in the target component. |
| FBOD + GGTOD | Work well with sky background clutter. | Difficult to choose two dictionaries simultaneously; cannot handle other scenes well. |
| SMSL | Works well when the target is salient and the background is clean. | Sensitive to boundaries; poor at background suppression; loses the target easily. |
| RIPT | Works well when the target is salient. | Does not work well when targets are close to or on boundaries; leaves much noise; loses the target entirely when it is not sufficiently salient. |

| Sequence | Frames | Size (pixels) | Background Description | Target Description |
|---|---|---|---|---|
| Scene 1 (Sequence 1) | 50 | 208 × 152 | Homogeneous, with a bright punctate disturbance | Small and very dim, with low contrast |
| Scene 2 (Sequence 2) | 67 | 320 × 240 | Very bright, heavy noise | Moves fast, with changing shape and brightness |
| Scene 3 (Sequence 3) | 52 | 128 × 128 | Sky scene with banded clouds | Tiny |
| Scene 4 (Sequence 4) | 30 | 256 × 200 | Heavy banded clouds and floccus clouds | Small, size varies considerably |
| Scene 5 (Sequence 5) | 200 | 252 × 220 | Complex background with trees and buildings | Very small, size changes slightly over the sequence |
| Scene 6 (Sequence 6) | 185 | 252 × 213 | Swaying plants that frequently obscure the target | Small, moves throughout the sequence with changing brightness |
| Scene 7 | — | 320 × 240 | Sea and mountain scene with obvious artificial structures | Bright ship target with a Gaussian shape |
| Scene 8 | — | 128 × 128 | Low contrast with heavy noise | Tiny, low saliency |

| Method | Parameters |
|---|---|
| Tophat | Structure shape: disk; structure size: 3 × 3 |
| LCM | Cell size: 3 × 3 |
| MPCM | Mean filter size: 3 × 3 |
| IPI | Patch size: 50 × 50; sliding step: 10 |
| NIPPS | Patch size: 50 × 50; sliding step: 10; separate settings for Sequence 3 and for the remaining sequences |
| ReWIPI | Patch size: 50 × 50; sliding step: 10; separate settings for Sequence 3 and for the remaining sequences |
| SMSL | Patch size: 30 × 30; sliding step: 10 |
| RIPT | Patch size: 30 × 30; sliding step: 10 |
| NRAM | Patch size: 50 × 50; sliding step: 10 |

| Method | Metric | Seq 1 | Seq 2 | Seq 3 | Seq 4 | Seq 5 | Seq 6 |
|---|---|---|---|---|---|---|---|
| Tophat | SCRG | 4.92 | 2.09 | 1.54 | 2.29 | 3.78 | 0.53 |
| | BSF | 5.55 | 10.66 | 7.55 | 8.59 | 3.00 | 0.47 |
| LCM | SCRG | 0.49 | 0.75 | 2.17 | 0.25 | 0.40 | 0.91 |
| | BSF | 0.69 | 0.93 | 1.18 | 0.69 | 0.96 | 0.95 |
| MPCM | SCRG | 4.56 | 2.05 | 1.22 | 0.49 | 2.59 | 2.44 |
| | BSF | 5.49 | 8.20 | 6.85 | 1.96 | 2.50 | 3.76 |
| IPI | SCRG | 7.36 | 7.64 | 2.59 | 4.24 | 2.10 | 3.25 |
| | BSF | 2.25 | 2.10 | 4.46 | 5.08 | 4.29 | 3.08 |
| NIPPS | SCRG | 35.85 | 11.48 | 2.89 | 4.03 | 3.94 | 6.29 |
| | BSF | 14.32 | 4.42 | 7.30 | 5.61 | 11.84 | 3.95 |
| ReWIPI | SCRG | 10.71 | 11.01 | 3.26 | 3.40 | 2.03 | 2.52 |
| | BSF | 6.72 | 2.93 | 8.36 | 3.18 | 3.93 | 2.58 |
| SMSL | SCRG | — | 1.86 | 2.80 | — | — | — |
| | BSF | — | 10.34 | 2.99 | — | — | — |
| RIPT | SCRG | — | 4.39 | 2.12 | — | — | — |
| | BSF | — | 23.39 | 11.5 | — | — | — |
| NRAM | SCRG | 87.34 | 11.02 | 2.72 | 8.81 | 7.75 | 7.87 |
| | BSF | 60.30 | 50.01 | 21.29 | 25.70 | 32.58 | 59.21 |
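
The SCRG and BSF values above can be computed, under commonly used definitions, with the sketch below: SCR = |μ_t − μ_b| / σ_b, SCRG = SCR_out / SCR_in, and BSF = σ_in / σ_out of the background. This is a minimal sketch, not the authors' evaluation code; it assumes the background is every non-target pixel, which may differ from the local neighbourhood used in the original evaluation, and the function names and `eps` guard are hypothetical.

```python
import numpy as np


def scr(img, target_mask, eps=1e-12):
    """Signal-to-clutter ratio |mu_t - mu_b| / sigma_b; background = all non-target pixels."""
    t = img[target_mask]
    b = img[~target_mask]
    return abs(t.mean() - b.mean()) / (b.std() + eps)


def scrg_bsf(img_in, img_out, target_mask, eps=1e-12):
    """SCRG = SCR_out / SCR_in and BSF = sigma_in / sigma_out of the background.

    eps keeps both ratios finite when the output background is perfectly flat.
    """
    scrg = scr(img_out, target_mask, eps) / (scr(img_in, target_mask, eps) + eps)
    bsf = img_in[~target_mask].std() / (img_out[~target_mask].std() + eps)
    return scrg, bsf
```

For example, halving the background standard deviation while keeping the target contrast unchanged doubles both SCRG and BSF.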

| | Tophat | LCM | MPCM | IPI | NIPPS | ReWIPI | SMSL | RIPT | NRAM |
|---|---|---|---|---|---|---|---|---|---|
| Complexity | O(I² log I² MN) | O(K³MN) | O(K³MN) | O(mn²) | O(mn²) | O(kmn²) | O(lmn) | O(mn²) | O(mn²) |
| Time (s) | 0.022 | 0.074 | 0.089 | 11.907 | 7.486 | 15.469 | 1.245 | 1.352 | 3.378 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Peng, L.; Zhang, T.; Cao, S.; Peng, Z. Infrared Small Target Detection via Non-Convex Rank Approximation Minimization Joint l2,1 Norm. Remote Sens. 2018, 10, 1821. https://doi.org/10.3390/rs10111821
