HDRANet: Hybrid Dilated Residual Attention Network for SAR Image Despeckling

Abstract

1. Introduction

2. Related Work

2.1. SAR Speckle Noise Model

2.2. CNN Based Image Denoising/Despeckling

2.3. Attention Mechanism

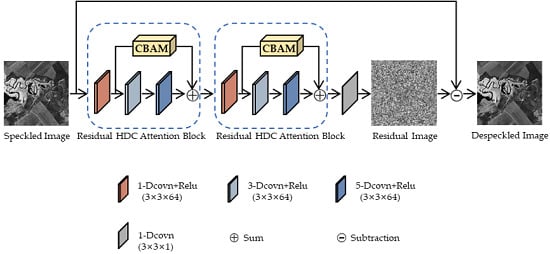

3. Proposed Method

3.1. Hybrid Dilated Convolution

3.2. Convolutional Block Attention Module

3.3. Residual Learning

4. Experiment Results

4.1. Experimental Setting

4.1.1. Training Data

4.1.2. Parameter Setting and Network Training

4.2. Quantitative Evaluations

4.3. Results on Synthetic Images

4.4. Results on Real SAR Images

5. Ablation Study

5.1. Convolutional Block Attention Module

5.2. Residual HDC Attention Block

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Name | Parameters |
|---|---|
| 1-Dconv+ReLU | 3 × 3 × 64, dilation = 1, padding = 1, stride = 1 |
| 3-Dconv+ReLU | 3 × 3 × 64, dilation = 3, padding = 3, stride = 1 |
| 5-Dconv+ReLU | 3 × 3 × 64, dilation = 5, padding = 5, stride = 1 |
| CBAM | Channel attention, reduction ratio of the MLP: r = 16; spatial attention, convolution filter size: 7 × 7 × 1 |
| 1-Dconv | 3 × 3 × 1, dilation = 1, padding = 1, stride = 1 |
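
In the table above, each padding value equals the dilation rate, which for a 3 × 3 kernel at stride 1 leaves the feature-map size unchanged. The Python sketch below checks that arithmetic and computes the receptive field that would result if the three dilated layers were applied once each in sequence. That ordering, the 40-pixel patch size, and the function names `conv_output_size` and `stacked_receptive_field` are illustrative assumptions, not details taken from the paper.

```python
def conv_output_size(n, kernel=3, dilation=1, padding=0, stride=1):
    """Output length along one spatial axis of a (dilated) convolution."""
    # A dilated kernel spans dilation * (kernel - 1) + 1 input pixels.
    effective_kernel = dilation * (kernel - 1) + 1
    return (n + 2 * padding - effective_kernel) // stride + 1


def stacked_receptive_field(dilations, kernel=3):
    """Receptive field of stride-1 convolutions applied one after another."""
    rf = 1
    for d in dilations:
        # Each layer widens the receptive field by (kernel - 1) * dilation.
        rf += (kernel - 1) * d
    return rf


# (dilation, padding) pairs as listed in the table.
layers = [(1, 1), (3, 3), (5, 5)]

# Hypothetical input patch size, chosen only for illustration.
patch = 40

sizes = [conv_output_size(patch, dilation=d, padding=p) for d, p in layers]
rf = stacked_receptive_field([d for d, _ in layers])

print("output sizes:", sizes)
print("receptive field (assumed sequential stacking):", rf)
```

Under these assumptions every layer preserves the 40-pixel size, and the three layers together see a 19 × 19 neighbourhood; without padding, the dilation-3 layer alone would shrink the patch to 34 pixels.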

| Image | Index | Noisy | Lee | PPB | SAR-BM3D | SAR-DRN | HDRANet |
|---|---|---|---|---|---|---|---|
| Airplane | PSNR | 11.17 | 17.23 | 19.57 | 21.32 | 23.21 | 23.36 |
| | SSIM | 0.151 | 0.312 | 0.550 | 0.679 | 0.702 | 0.701 |
| Ship | PSNR | 12.33 | 20.51 | 21.71 | 23.36 | 22.68 | 24.31 |
| | SSIM | 0.161 | 0.389 | 0.536 | 0.637 | 0.654 | 0.655 |
| Couple | PSNR | 12.72 | 20.92 | 21.34 | 23.04 | 23.69 | 25.18 |
| | SSIM | 0.177 | 0.412 | 0.512 | 0.638 | 0.666 | 0.674 |
| Bridge | PSNR | 13.27 | 20.15 | 19.80 | 21.42 | 22.64 | 22.83 |
| | SSIM | 0.261 | 0.500 | 0.400 | 0.553 | 0.586 | 0.586 |
| Flowers | PSNR | 15.12 | 22.23 | 21.44 | 23.35 | 25.02 | 25.14 |
| | SSIM | 0.303 | 0.593 | 0.609 | 0.725 | 0.754 | 0.754 |
| Foreman | PSNR | 11.45 | 17.25 | 19.70 | 21.19 | 25.32 | 26.16 |
| | SSIM | 0.101 | 0.312 | 0.644 | 0.747 | 0.784 | 0.780 |
| Average | PSNR | 12.68 | 19.72 | 20.59 | 22.28 | 23.76 | 24.50 |
| | SSIM | 0.192 | 0.420 | 0.542 | 0.663 | 0.691 | 0.692 |
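
PSNR, reported in the results tables, is conventionally defined as 10 · log10(peak² / MSE), where MSE is the mean squared error between the clean reference and the despeckled estimate. The Python sketch below is a minimal illustration of that formula. The peak value of 255 (8-bit images), the flat-list image representation, the sample pixel values, and the function name `psnr` are assumptions; the authors' evaluation code is not given here.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 4-pixel example: every pixel is off by 10 grey levels.
clean = [100, 150, 200, 250]
restored = [110, 140, 210, 240]
value = psnr(clean, restored)
print(f"PSNR = {value:.2f} dB")
```

Higher values indicate a smaller mean squared error relative to the peak intensity, which is why the despeckled columns score well above the noisy input.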

| Image | Index | Noisy | Lee | PPB | SAR-BM3D | SAR-DRN | HDRANet |
|---|---|---|---|---|---|---|---|
| Airplane | PSNR | 13.53 | 20.33 | 21.67 | 23.87 | 24.61 | 24.96 |
| | SSIM | 0.216 | 0.413 | 0.638 | 0.747 | 0.758 | 0.760 |
| Ship | PSNR | 14.69 | 23.01 | 23.97 | 25.53 | 24.63 | 27.17 |
| | SSIM | 0.233 | 0.486 | 0.613 | 0.698 | 0.708 | 0.709 |
| Couple | PSNR | 15.21 | 23.36 | 23.65 | 25.67 | 26.01 | 26.19 |
| | SSIM | 0.258 | 0.521 | 0.606 | 0.725 | 0.740 | 0.738 |
| Bridge | PSNR | 15.79 | 22.20 | 21.60 | 23.32 | 24.06 | 23.91 |
| | SSIM | 0.379 | 0.591 | 0.509 | 0.656 | 0.671 | 0.675 |
| Flowers | PSNR | 17.69 | 24.34 | 24.03 | 25.47 | 26.28 | 26.88 |
| | SSIM | 0.418 | 0.691 | 0.717 | 0.797 | 0.812 | 0.815 |
| Foreman | PSNR | 14.02 | 20.48 | 22.56 | 24.41 | 27.24 | 28.30 |
| | SSIM | 0.158 | 0.436 | 0.730 | 0.812 | 0.824 | 0.826 |
| Average | PSNR | 15.16 | 22.29 | 22.91 | 24.71 | 25.47 | 26.24 |
| | SSIM | 0.277 | 0.523 | 0.636 | 0.739 | 0.752 | 0.754 |

| Image | Index | Noisy | Lee | PPB | SAR-BM3D | SAR-DRN | HDRANet |
|---|---|---|---|---|---|---|---|
| Airplane | PSNR | 15.90 | 22.84 | 24.09 | 25.92 | 26.50 | 26.51 |
| | SSIM | 0.286 | 0.505 | 0.718 | 0.800 | 0.809 | 0.809 |
| Ship | PSNR | 17.30 | 25.05 | 26.23 | 27.67 | 28.36 | 28.57 |
| | SSIM | 0.320 | 0.581 | 0.682 | 0.753 | 0.762 | 0.760 |
| Couple | PSNR | 17.86 | 25.41 | 25.99 | 27.80 | 27.37 | 27.91 |
| | SSIM | 0.353 | 0.618 | 0.696 | 0.784 | 0.793 | 0.795 |
| Bridge | PSNR | 18.48 | 23.82 | 23.50 | 25.13 | 24.43 | 25.81 |
| | SSIM | 0.509 | 0.666 | 0.618 | 0.748 | 0.747 | 0.755 |
| Flowers | PSNR | 20.40 | 25.99 | 26.30 | 27.39 | 27.52 | 27.49 |
| | SSIM | 0.535 | 0.763 | 0.795 | 0.847 | 0.854 | 0.855 |
| Foreman | PSNR | 16.65 | 23.52 | 25.98 | 27.47 | 29.38 | 29.52 |
| | SSIM | 0.233 | 0.551 | 0.807 | 0.855 | 0.857 | 0.855 |
| Average | PSNR | 17.77 | 24.44 | 25.35 | 26.90 | 27.26 | 27.64 |
| | SSIM | 0.373 | 0.614 | 0.719 | 0.798 | 0.804 | 0.805 |

| Image | Area | Noisy | Lee | PPB | SAR-BM3D | SAR-DRN | HDRANet |
|---|---|---|---|---|---|---|---|
| Horsetrack | A | 18.86 | 95.48 | 1163.52 | 408.50 | 1396.85 | 1415.08 |
| | B | 15.69 | 95.33 | 940.78 | 338.51 | 807.16 | 944.62 |
| Volgograd | A | 24.58 | 80.61 | 336.19 | 197.16 | 389.74 | 508.59 |
| | B | 19.38 | 121.26 | 1276.60 | 751.99 | 1500.98 | 1659.79 |
| Noerdlingen | A | 18.52 | 107.32 | 2143.67 | 505.04 | 1735.32 | 2193.07 |
| | B | 12.21 | 37.09 | 75.49 | 43.86 | 60.66 | 76.50 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, J.; Li, Y.; Xiao, Y.; Bai, Y. HDRANet: Hybrid Dilated Residual Attention Network for SAR Image Despeckling. Remote Sens. 2019, 11, 2921. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11242921