1. Introduction

The automated interpretation of building environments is a major topic in the current literature [

1]. Where modelers used to manually identify building components such as structural elements and furniture, more unsupervised workflows are proposed for scene interpretation [

2]. Applications such as Scan-to-BIM and indoor navigation of autonomous robots heavily rely on rapid scene interpretation, making its automation highly desired by the industry [

3].

The interpretation of building environments is typically performed on a set of observations such as imagery or point clouds. From these inputs, a set of relevant features is extracted which is then used to classify the observations [

4]. Generally, structural elements such as walls and slabs are identified first after which domain-specific components are detected. Also, because structural elements have more distinct geometry than visual characteristics, the classification is typically performed on the metric data [

5]. Currently, researchers have nearly exclusively worked on point cloud data acquired from Terrestrial Laser Scanning (TLS), Indoor Mobile Mapping Systems (IMMS) or RGBD sensors [

6]. However, researchers have reported that the noise, occlusions, uneven data distribution and sparsity of point clouds can lead to severe misclassifications [

4,

7,

8]. Alternatively, mesh geometry is becoming more accessible for building geometry due to the recent advancements in speed, correctness and detailing of meshing operations [

9]. Meshes are not hindered by the above obstacles in point cloud data and thus could potentially improve the detection rate [

10]. However, researchers typically generate features from their raw input data and overlook the geometry representation opportunities despite their obvious impact on the feature extraction. As a result, there currently is a gap in the literature investigating the impact of geometry representations on the feature extraction in building environments. The few research papers that do actually compare features mostly focus on the impact of features on the result without considering the geometry representation [

11,

12,

13]. In this research, we specifically target the comparison of mesh segments to point cloud segments, providing a much-needed empirical study for building interpretation. We extend on the unsupervised segmentation frameworks proposed in [

14,

15] for both point cloud and 3D mesh geometries. In summary, the method’s main contributions are:

A theoretical comparison between mesh-based and point-based geometries for classification

An empirical study that compares both approaches in terms of feature discriminativeness, distinctness and classification results

An implementation of frequently used building classification features and the validation on the Stanford 2D-3D-Semantics Dataset (2D-3D-S).

The remainder of this work is structured as follows. The background and related work is presented in

Section 2. In

Section 3 we propose a methodology for extracting pertinent features designed for efficient classification. The workbench design and experimental results are given in

Section 4 and discussed in

Section 5 and

Section 6. Finally, major conclusions are presented in

Section 7.

2. Background and Related Work

The typical procedure to interpret building geometry is to first acquire a set of relevant observations, followed by one or more preprocessing steps to transform the raw data to a set of useful inputs i.e., mesh segments or voxel octrees. Next, a set of features is extracted from every observation which is then fed to a classification model that computes the most adequate class label. The geometry definitions, feature extraction and classification state of the art are discussed below.

2.1. Geometry Representations

Both point cloud data and meshes have different geometry representations depending on the type of data acquisition system and algorithm that is used to generate them. For point cloud data, there are the structured and unstructured databases. The representation and processing of the former is very efficient due to its structure, making it highly desired by navigation and interaction applications [

16,

17]. A major advantage of these raw data structures is the inherent sensor information that is present in the point cloud. The relative location and orientation of the sensor is known in relation to the points and thus this information can be used for improved scene interpretation and for the feature extraction [

18,

19,

20]. However, the feature extraction is contained per setup location, which severely limits the amount of information (and thus features) that can be extracted from the scene. These approaches are also bound to a specific sensor which limits the applicability.

In contrast, there are unstructured point clouds which represent the entire scene as an arbitrary collection of Cartesian points. As this is not efficient for processing, these points are also structured with octrees [

21,

22] or hybrid indexing methodologies [

23]. Initial octrees had a limited voxel depth due to the dimensionality

of the nodes but recent advancements in computer graphics such as kd-trees and sparse voxel octrees can efficiently represent the full point cloud [

24,

25]. As a result, the entire point cloud can be simultaneously assessed which a popular approach in the literature [

26,

27]. The generation of these octrees is computationally demanding, but since it is a one-time operation, researchers have found it to be highly efficient. An interesting property of these structures is that the voxels themselves can also be used as the base input for the feature extraction as presented by Poux et al. [

14]. Also, the hierarchical structure of the data tree is used to compute features for multiple voxel sizes, which is a trade-off between computational efficiency and the loss of information. This approach is becoming increasingly popular with researchers such as Yang et al. [

28], Wang et al. [

29], Riggio et al. [

30] and Dimitrov et al. [

31] all exploiting the construct of the octree to initially compute coarse features from the point cloud before fine-tuning the results using the full resolution of the point cloud.

Aside from point clouds and related data structures, researchers also rely on mesh geometry as the base input for scene interpretation. A triangle or quad mesh is a surface representation which is calculated from the point cloud by sampling or computing a set of vertices and defining faces in between these points. It is a notably different geometric data representation compared to the point cloud since a mesh is a construct that deals with noise and occlusions and makes assumptions about the surface of an object in between the observed points. Any geometric features extracted from a mesh are therefore inherently different from those extracted from a point cloud which will have an impact on the scene interpretation. The literature presents several ways to compute mesh geometry including variations of Poisson, Voronoi and Delaunay concepts [

32,

33]. The driving factors of a mesh operation are its robustness to noise and occlusions, speed, detailing and data reduction. Speed and detailing are inversely proportional and so are detailing and data reduction. A careful balance is to be retained between detailing, noise reduction and the number of vertices/faces which is a prominent factor in the computational cost of the feature extraction. In recent years, research in computer graphics have made spectacular advancements, with methods capable of producing a realistic and accurate mesh with less than 1% of the points in a matter of minutes [

9,

34]. There have also been significant advancements in mesh connectivity and the creation of manifold meshes [

35]. A major advantage of photogrammetric meshes is that the geometry can be texturized using the imagery which allows for the incorporation of computer vision features which has tremendous potential towards data association tasks [

36]. However, analog to the raster data, image information is not always present along with the point cloud and thus it is not considered in this research.

There are several important differences between meshes and point clouds for the feature extraction. A first aspect is the number of observations that must be processed. Less observations indicates less computational costs, and thus potentially more pertinent feature descriptors. A second aspect is the normal estimation of the points/faces that lies at the base of many geometric features. Meshes are continuous smooth surfaces and thus have more reliable normal estimation procedures [

37] compared to point clouds, especially in the vicinity of edges. A third aspect is the data distribution. A point cloud’s density is typically a function of the distance between an object’s surface and the sensor while the density of a mesh is a function of the surface detailing. As a result, the point cloud density will be low in variance and more arbitrary while meshes will consistently have lower density in flat areas, higher density near curvatures and will have a higher variance. This difference in distribution impacts the nearest neighbor searches in both datasets but if done properly with adaptive search radii [

38] or adjacency matrices, this does not impact the feature extraction.

2.2. Segmentation

Prior to the classification, researchers typically conduct a segmentation of the geometry to increase the discriminative properties of the features and to reduce the number of observations. The voxel octree or mesh is partitioned into a set of segments based on some separation criteria i.e., property similarity, model conformity or associativity. In recent years, numerous methods have been proposed for efficient point cloud segmentation including region growing, edge detection, model-based methods, machine learning techniques and graphical models [

14,

39,

40,

41,

42,

43,

44,

45]. Similar to meshing, speed and detailing are inversely proportional in these procedures. The type of segmentation has a significant impact on the feature extraction. Not only do the feature values change in function of the segment’s size, the context also changes based on the hypotheses of the segmentation. There are segmentation methods that produce supervoxels based on similar property values purely to reduce the number of observations without altering the context [

46,

47,

48,

49,

50,

51]. In contrast, segmentation methods partition the data according to geometric primitives e.g., planes and cylinders [

52,

53]. For structural building element detection, planar or smooth segments are the most frequently used primitives since most of the scene corresponds well to this model [

5,

54,

55,

56].

In addition to the segment type, there are several other important differences between mesh-based and point-based segments that impact the feature extraction. A first aspect is the inclusion of noise. Meshing operations actively deal with noise while segmentation operations assign points to separate clusters [

57]. As a result, point-based segments typically contain more noise than mesh-based segments which impacts the feature extraction. A second aspect is the topology between neighboring segments. Recent meshing operations carefully reconstruct edges and produce properly connected geometry [

9]. Neighboring segments are thus connected which improves the contextual features. A third aspect again is the data distribution between point cloud and mesh segments. Because of the large differences in face sizes in a mesh segment, the feature extraction should be weighted by the face sizes to compute the appropriate feature values.

2.3. Feature Extraction

There are numerous geometric features that can be extracted from point clouds and meshes, ranging from heuristic descriptors to deep-learning information. First, there are the local and contextual features describing the shape of the objects [

4]. The most popular local features are based on the eigenvalues

and the eigenvectors

of the covariance matrix of a support radius, which in segment-based classification are the faces or points inside the segment [

12,

58,

59]. Recent approaches compute this from a weighted PCA with the geometric median which has proven to outperform the sample mean [

7]. The left column in

Table 1 shows the different 3D descriptors that most classification approaches use. In this work, we do not consider hierarchical local features such as defined by Wang et al. [

60] and Zhu et al. [

61] since these typically target supervoxels instead of segment-based classification.

In addition to these transformation invariant features, it is common in building component detection to compute several absolute metrics from the segments. Popular features include the segment’s orientation in relation to the gravity or Z-axis, surface area, dimensions and the aspect ratio [

4,

5,

11,

62,

63,

64,

65]. The most frequently used features are summarized in the right column of

Table 1. Notably, most features are significantly correlated, hence the need for a proper feature weight estimation. Also, these features impose several assumptions about the appearance as structural elements. Some researchers go as far to impose Manhattan-world assumptions on the input data, thus only considering features that target orthogonally constructed elements in a regular layout [

53,

55,

66,

67,

68]. However, this does not coincide with the scope of this research to study the effects of geometry on general detection approaches. More advanced features e.g., HOG and SHOT are also proposed but these features are more suited for object recognition than the semantic labeling of generic structural element classes such as floor, ceilings and walls [

69,

70,

71,

72].

For datasets of interior building environments, shape features alone are typically not sufficiently discriminative to reliably compute the class labels. Therefore, proximity and topology features are also proposed which describe the relation between the observed segment and its surroundings. These features test for frequently occurring object configurations in building environments. An important factor with these features is the selection of the neighbor reference group. Aside from k-nearest neighbors, attribute-specific reference groups can be used to test for certain topological relations i.e., whether there is a large horizontal segment located underneath a vertical segment that could indicate a floor-wall relation. As such, several class-to-class configurations can be encoded based on building logic [

8]. While the above-defined relations are man-made descriptors, it is becoming increasingly popular to generate contextual information by convoluting local features in neural networks. This shows very promising results in computer vision and the classification of rasterized data [

73,

74] since these systems are designed to detect patterns in low-level information inputs (like RGBD imagery). However, its full potential remains to be unlocked for segment-based classification since current neural networks require a static set of inputs which is inherently different from the joint meshes or point clouds of a building. As such, current methods feed man-made features as a static input to the networks which is the same approach as other classification models.

An interesting strategy is to define the contextual features as pairwise or higher order potentials as part of a probabilistic graphical model such as a Conditional Random Field [

75]. Instead of encoding the features as unary potentials that only contribute to the label estimation of the observation itself, CRFs define the conditional probability of the class labels over all the segments. As such, not only do the shape and contextual relations but also the probability of the class labels themselves contribute to the estimation of the best fit configuration of the class labels or the most likely class of each segment individually [

76]. This approach has been used in several studies with promising results e.g., [

70,

77,

78,

79,

80] all use CRFs with promising results. However, these constructs are ideally fit to exploit label associativity between neighboring segments which is often the case in supervoxels but not for planar segments that already exploit much of this information during the segmentation step [

19,

81]. We therefore do not consider these features in this research but rather focus on the commonly used shape, contextual and topology features in building environments.

In addition to geometric features, the radiometric properties of the remote sensing data can be exploited i.e., RGB, infrared and even multi-spectral features [

82]. This is extensive field of research in image-based classification [

83]. Additionally, the Signal-to-Noise ratio (SNR) of LiDAR systems can be used as it is significantly influenced by the target object’s material properties such as reflectivity [

84]. Both point cloud data and mesh data have access to this information depending on the sensors that were used to capture the base inputs. Overall, meshes can store this information with a higher resolution as the full resolution of the inputs can be projected onto the mesh faces.

2.4. Comparison of Feature Extractions

We build on several important works to compare the effects of geometric features. First, there is the study by Weinmann et al. [

12,

85] and Dittrich et al. [

86] in which the issue is addressed of how to increase the distinctiveness of geometric features and select the most relevant ones for point cloud classification. It is stated that point cloud features and class characteristics have significant variance which require robust classification models such as Random Forests (RF) [

87] or Bagged Trees. We also confirm this in our previous research [

8,

88] and thus in this work we will also use Bagged trees to test the features of the inputs. A second study that is very relevant to this work is from Dong et al. [

11] who study the selection of LiDAR geometric features with adaptive neighborhoods for classification. Along with Garstka et al. [

13] and Dahlke et al. [

89] they also show that the neighborhood selection has a significant impact on the feature performance. As this is inherently different for both data presentation, we propose to focus the comparison on the segment-based features since these segments share the common building logic by which they are obtained.

Most approaches currently employ the point cloud as the basis for the geometry features despite several studies showing that the lack of consistent point distributions, holes and noise has a significant impact on the feature extraction. Lin et al. [

7] partially compensate this by introducing weighted covariance matrices which are a non-issue for mesh geometry. The same applies to Koppula et al. [

90] who attempt to smooth the normal estimation in the presence of noise and edges. Mesh geometry descriptors do not need such compensation and have proven to produce at least as promising results as their point cloud counterparts [

72,

91]. However, a rigorous comparison of both geometry representations is currently absent in the literature. Indeed, meshing can be seen as a viable preprocessing step which lowers the computational cost of the segmentation, feature extraction and classification while simultaneously increasing the feature discriminativeness and detection rate.

3. Active Methodology

The proposed methodology aims at extracting insights on the potential of feature representation for two widely used geometric data representation: 3D Point Clouds and 3D meshes. More specifically, we elaborate on the potential features of both geometries as these are the two most commonly employed geometry types in the literature. In the paragraphs below, we study both the local and contextual features that researchers can use for scene interpretation, along with their advantages and disadvantages.

3.1. Unsupervised Segmentation

Numerous segmentation algorithms are proposed in the literature (

Section 2) including iterative region-growing, model-based methods such as RANSAC, Conditional Random Fields and Neural Networks. A prominent factor is the choice of region type to segment. Mostly planar regions are preferred for building interpretation since these typically correspond well to the planarity of the structural elements of a building. In this research, we consider these flexible regions as the inputs for both the point cloud and the mesh-based classification because of their versatility to represent building geometry (

Figure 1 and

Figure 2).

The main difference is the parameter supervision for the mesh segmentation, whereas the approach proposed by Poux et al. [

14] chosen for the point cloud segmentation is fully unsupervised. Thus, from raw data to segments, the point cloud is preferred at this step for full automation. Both datasets are pre-segmented into regions with similar properties. The meshes were processed by an efficient region-growing algorithm developed in previous work [

93] that extracted smooth surfaces. The point clouds were clustered in an unsupervised fashion following the work of Poux et al. [

14].

3.2. Segment-Based Feature Extraction

The obtained segments constitute the base for the feature extraction. These are referred to as region-based or segment-based features similar to [

43] and obtain more distinct features. Three types of features are used in this research i.e., shape, proximity and topology features. Each type is explained below.

3.2.1. Shape Features

Shape features encode information about the segment itself and its surroundings. As such, it includes both local and contextual information. The local characteristics such as the surface area are an important queue for the presence of structural elements. Also, the dimensions and the aspect ratio give information concerning the shape of the object [

4] e.g., long slender segments have a higher probability of being a beam. In indoor datasets, local features alone generally do not have sufficient discriminative power to determine the class labels. Therefore, contextual features are also included which describe the attributes of a cluster with respect to its surroundings. Typically, relationships are established with nearby neighbors of the observation. However, higher order neighbors may also be used along with specific reference groups.

Table 2 provides the mathematical notation and graphical overview of the shape features used in the experiments.

3.2.2. Proximity Features

These continuous metrics describe the distance of

to specific reference observation

r in the structure that is likely to belong to a certain structural class. For instance, the distance from

to the border of the nearest large vertical segment is an indicator for a floor-wall or floor-ceiling configuration. In addition to the size, reference segments are defined based on their orientation and proximity with respect to

. The concepts formalized in

Table 3 correspond to the following intuitive definitions:

Distance to the nearest vertical segment: Structural elements typically have their borders near large non-horizontal segments such as the edges of walls and beams. As such, this distance between and a reference segment r differentiates structural from non-structural observations.

Vertical distance to the nearest horizontal segment above: Analog to the feature above, structural elements are more likely to have their borders near large near-horizontal segments such as floors and ceilings. Together with the feature below, these values are important to identify large non-structural observations which are prone to mislabeling due to their feature associativity to structural classes. Instances such as blackboards, closets and machinery are less likely to connect to a ceiling opposed to wall geometry.

Vertical distance to the nearest horizontal segment underneath: This feature supports the above descriptor as structural objects are typically supported underneath.

Average distance to R nearest large segments: This feature encodes the characteristic of structural elements which are more likely to be connected to multiple significantly large observations. As they are part of the structure, they are less likely to be isolated from other structural elements. The average distance to large observations serves as a measure for this characteristic.

3.2.3. Topology Features

Several topological relations are also evaluated. These features encode the presence of reoccurring object configurations within the vicinity of the observed surface. Although they have poor classification capabilities by themselves, these Boolean predictors are valuable discriminative features between classes e.g., a horizontal segment is more likely to be a ceiling when there are horizontal segments directly above it. Also, the presence and location of clutter is considered. Based on the expected occlusions of the different object classes, the presence of clutter is used to identify objects. For instance, a floor is expected to occlude objects underneath it. This information is typically extracted from the sensor’s position [

4] but as previously discussed, we exclude this information for generalization purposes. Five topological relations are observed of which the mathematical representation is given in

Table 4. These correspond to the following intuitive definitions:

Number of connected segments: This feature targets the identification of clutter and non-structural observations as it makes up over 90% of building interiors. Much like spatial filters used in point cloud cleanup methods, segments with no direct neighbors are less likely to belong to a structural object class.

Unobstructed nearby segment in front or behind: This feature observes the relation between and a large nearby segment r with a similar orientation. If the pair is unobstructed i.e., no segments are located in between, this implies that the space between and r is a void, and thus and are likely to belong to the same class e.g., a portion of both sides of a wall face. Moreover, it is an important feature to detect large segments of objects of no interest as these are more likely to be surrounded by clutter and thus have no such unobstructed relations.

Presence of a large nearby segment above: This feature (together with the features below) observes the configuration of potential ceilings, floor and roof segments. For instance, in a fully documented building, it is likely that floors overlay ceilings.

Presence of a large nearby segment below: Complementary to the feature above, the presence of a segment below increases the likelihood of to belong to a floor or roof.

No presence of any segments above: This feature specifically is a discriminative property of roof segments that typically do not have any overlaying segments. As this does also apply to floor surfaces on a building’s exterior, this feature is also dependent on other characteristics.

Please note that some of the contextual and topology features do not apply to certain . e.g., the feature observing the presence of any segments above only applies to non-vertical segments. For these features, the value is automatically returned as the non-associative value to avoid false positives. Additionally, the feature values of all are normalized and mapped on a Gaussian distribution with a maximum score of 1 to allow for a better division of the feature space and to smooth out extreme feature values.

3.3. Compatibility to Instance Segmentation

Following the segment-based feature extraction presented in

Section 3.2, our method proposes an empirical study to compare the achievable classification performance using shape, contextual and topology features from both the point cloud data and mesh data. As we reason at the segment level, we propose to simply represent each segment from the point cloud data by its associated convex-hull geometry. It is to note that other representations such as alpha shape provide a better representativity but can put additional calculation load as well as parameters inclusion that can hurt the unsupervised framework. Given a realistic benchmark dataset, a classification model is trained and tested using a flattened segment-based feature set for both point cloud and mesh datasets, as illustrated in

Figure 3.

In the experiment, each observation is assigned one of class labels given their shape, contextual and topology feature vectors . We specifically target the structural building element classes of a building i.e., the walls, floors, ceilings and beams. These are essential classes that that are present in nearly every building and can be used for further scene interpretation. In the following sections, a detailed implementation along with the results is given.

4. Experiments

The performance of both geometries is tested on three aspects. First, an empirical study is conducted of the feature extraction in terms of distinctness and discriminativeness for the different classes. Second, a general classification test is conducted. For each geometry type, a machine learning classifier was pre-trained using test data from previous work [

8] and validated on the same benchmark data. Third, a visual inspection is conducted on the test results to give a more in-depth overview of the classification differences between both methods.

4.1. Feature Analysis

The feature analysis is conducted using the shape features from

Table 2. These features are selected for two reasons. First, shape features are robust descriptors for a segment’s geometry and will be impacted the most by changes in the shape of the segments. Second, these features give a proper overview of the feature discriminativeness for the different classes. Notably, the topology and proximity features are not considered in this section as these are more nuanced features which do not properly reflect the geometric differences between the segments. Additionally, these features are better suited for multi-story buildings and thus do not represent the distribution of the 2D-3D-S dataset.

For every feature, a boxplot is generated per class for both methods based on the above established segments and the ground truth labels. The median and interquartile range (IQR) of each boxplot serve as the primary statistic to evaluate the feature distinctness. Additionally, the overlapping coefficient (OVL) of the IQR between the different classes serve the main indicator for the feature discriminative power. For instance, a feature with a different median and a small IQR for every class is considered a more distinct feature, and will therefore positively impact the classification. e.g., the surface area of segments labeled as “other” are systematically smaller than segments belonging to structural classes. In contrast, features with overlapping IQRs between classes have a lower discriminativeness which can lead to misclassifications. e.g., the height of both floors and ceilings are similar, and thus a proper separation cannot be made between both classes for this feature. Please note that not every feature is equally distinct for every class nor should this be the case. A properly trained classification model assigns weights to different features for every class. Also, a normal distribution is not always the best representation of the data, as can be derived from the skew ( vs ) of the boxplots. For instance, beams, which can be horizontal or vertical, are significantly skewed and have a larger feature dispersion because of their class definition. However, this is not an issue as there are classification models which allow multiple feature value intervals to be associated with a class. Overall, each class that has at least several distinct and discriminative features will be properly identified.

4.2. Classification Test

To evaluate the impact of the feature value discrepancy, both datasets are classified by a pre-trained Bagged Trees classifier which has proven to be very well suited to deal with building geometry observations. The MATLAB Bagged Trees algorithm is implemented for both approaches similar to Munoz et al. [

94], Vosselman et al. [

94] and Niemeyer et al. [

76]. The training data, which was acquired in previous work [

8], is copied into

M different bootstrapped datasets along with a subset of the feature variables. For every subset, a discriminative decision tree is trained. The final classification model consists of the aggregate of the decision trees. New observations are then classified by the majority vote over the trained decision trees.

4.3. Visual Inspection

The visual inspection is conducted on the final classification of both datasets. First, both geometries are metrically compared to each other. The Euclidean distance is determined between both datasets and the deviation metrics are evaluated. Next, a visual study is conducted of the classification of both datasets. Several callouts are made of the different areas of the Stanford dataset and compared for both methods. The target of the visual inspection is to confirm the metrics of the classification tests and to evaluate false positives.

5. Results

The experiments are conducted on the Stanford 2D-3D-Semantics Dataset (2D-3D-S) [

92]. It contains 6 large indoor spaces of 3 buildings on the Stanford campus. It was captured using the Matterport Gen1 RGB-D sensor resulting in a point cloud with 695,878,620 points linked to 6 meshes with a total of 648,698 faces. Each dataset is composed of the raw 3D point clouds, the extracted mesh geometry and the semantic labels. The mesh and point clouds serve as the main input to the interpretation pipeline while the semantic labels serve as the ground truth for the classification. Overall, the datasets pose a challenging test for classification procedures. The environments were captured under realistic conditions and contain significant clutter, occlusions and many different instances of each class. The point clouds were manually annotated per area by [

92] with a wide variety of classes, which in this experiment are restructured into ceiling, floor, wall, beam and other (including all the furniture classes, the windows and the doors).

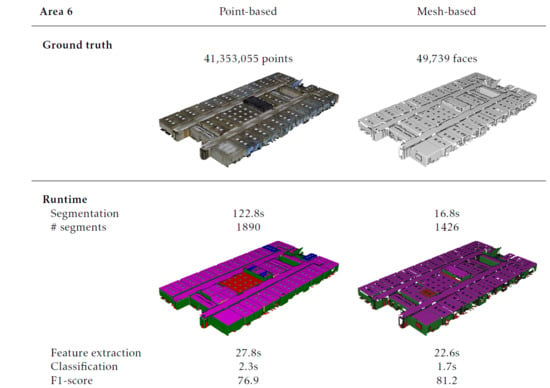

Prior to the tests, each dataset is segmented into smooth planar segments as shown in

Table 5 and

Figure 4,

Figure 5 and

Figure 6. Both datasets were segmented with similar parameters to achieve a proper segmentation of the building interiors.

Table 5 depicts the number of segments that was created for each class. Overall, proper segments are extracted from both datasets. However, there are several differences between both procedures. First, the processing times of the point clouds are significantly higher than those of the meshes. This is expected since the meshing (of which the processing time is not included here) reduces the number of observations by over 90% compared to the initial point cloud. Second, the number of segments created from the point clouds are significantly higher than with the meshes due to the increased noise, observations and holes. Floors are an exception to this as these segments contain little curvature and detailing and are less obscured by other objects in comparison to other object types. These differences impact the processing time of the segmentation and the classification of the point clouds. However, this is counter-balanced by the fact that the point cloud does not necessitate any preprocessing step to transform it into a mesh, which is often a very time-consuming process. The resulting point-based and mesh-based segments

S are the main input for the feature extraction, the classification and the visual inspection.

The results of the feature evaluation are listed in

Table 6 and

Table 7. It is observed that several features take on extreme values which is expected as the feature extraction is not normally distributed and focus on discriminativeness. The IQRs of both methods have little overlap between classes and the IQRs themselves have limited ranges for most classes. For instance, observations of walls and ceilings are systematically larger and have higher normal similarity than observations of clutter which is marked by randomness. When combined, these features are also useful to identify error prone segments such as doors, which have a distinct aspect ratio (height and width). Based on the boxplots, it is expected that both approaches will provide promising classification results. Despite their individual performance, there are significant differences between the feature values of both methods due to the differences between the point-based and the mesh-based segments. First, the median, IQR and skew of each class significantly deviate depending on the feature.

Table 7 reports maximum feature score deviations of 0.17 for the median, 39% for the average skew and 0.19 for the IQR. As a result, there are differences in OVL of up to 0.23 between the classes and only 0.01 OVL between the methods as a whole. These differences are caused by the segment’s shape as well as by their context. For instance, the surface area feature reveals that mesh-based segments on average score 0.13 higher than their point-based counterparts indicating an increased size. This significantly affects the number of segments created (which is up to 2 times higher for the point clouds) which in turn impacts the IQR of each class. On average, the point-based segments generate 0.19 larger IQRs than the mesh-based segments, which can affect the classification. While these differences vary depending on the feature,

Table 7 shows that there are systematic deviations between the segments of both methods. Overall, the IQRs of mesh-based segments are 0.07 smaller and have 0.08 less overlap, making them respectively more distinct and discriminative.

Table 8 shows the result of the classification and

Table 4,

Table 5 and

Table 6 show the processing times. Each column in

Table 8 depicts the F1 score for each method for the different classes. Overall, both methods were able to classify the segments generated on the in total 300 million points and 700,000 meshes. The average F1 score for the point-based and the mesh-based method are respectively 75.9% and 75.5%, which is promising considering the obstacles of the benchmark data and that the data was solely used for validation and not training. Both F1 scores are similar which indicates that the classification model was properly trained for the point-based segments despite the discrepancy in feature values. This can be attributed to the Bagged Trees capacity to deal with high variance datasets such as building environments. However, the F1 scores in

Table 8 only provide the overall statistics of the classification, of which the clutter is a significant part. To make an in-depth comparison between both methods, a visual inspection is required.

The results of the visual inspection are shown in

Figure 7. The maximal deviation of the mesh geometry is 13 cm from the point cloud, but on average, the standard deviation is under 2.5 cm

Figure 8. Concerning structural elements, this error is less than 1 cm, due to their planar shape. However, we note that the meshing is prone to providing incomplete geometries due to the partial uneven sampling of the point geometry, which results in less triangles but more reliability of the mesh surface. We can see that both methods perform well on the varying scenarios and challenges posed by the datasets. The first thing to note, is that the point cloud presents an overall higher consistency due to “complete” nature of the spatial representation. Its mesh counterpart, as highlighted in the previous section, is less complete, but the accuracy of the classification is not highly impacted. The second thing to note, is that both methods largely agree for the main parts (walls, ceilings, floors), and the differences are mainly noticeable for elements connected to structural classes such as the clutter, bookcases and tables as illustrated in Diff1 from

Figure 7. We also note that some beam elements are identified in the mesh modality whereas it is classified as wall in the point cloud dataset. Finally, we note that independent objects with a clear distinctiveness from wall elements (e.g., chairs) are correctly handled in both datasets with very little visual difference between modalities.

6. Discussion

In this section, the pros and cons of the point-based and the mesh-based approach are discussed. First, we study the impact of the geometry on the feature extraction between the point-based and the mesh-based method. A first major difference to discuss is that the mesh-based segments have smoother geometries with less noise, less holes and thus is more consistently connected to other segments. As a result, mesh segments are typically larger with more distinct local and contextual information. The experiments clearly show that meshing can positively impacts the feature extraction and leads to more discriminative shape features. In contrast, the point-based segments better represent the raw information of the point cloud without interpolation. While these segments contain noise or are affected by occlusions, they are not prone to potential errors that plague meshing operations, and are better suited for unsupervised workflows with minimal serialized breaking points. Instances such as falsely connected segments, falsely closed holes, non-manifold mesh faces and oversimplification of the scene can have a negative impact on the feature extraction and the subsequent classification. It is argued that with each added step to the process, additional errors are injected thus working close to the point clouds is recommended in this case. However, if the meshing is performed appropriately, more distinct and discriminative features values can be extracted from the segments.

The second difference between both methods is the impact on the classification results. To this end, one should consider both the F1 scores of the classification and the visual inspection of the different areas in the 2D-3D-S dataset. The experiments reveal that both geometric modalities deliver over 75% F1-scores. However, the metrics are nuanced as there is a major difference between small and large segments. The visual inspection in

Figure 7 shows that the classification of the larger segments in the scene is near-identical. Therefore, the difference in F1 scores is mainly due to smaller segments where noise, occlusions and differences in surface area have a much higher impact. As these segments only make up a small portion of the total surface area of the scene, their significance is very limited. However, there are some occurrences of larger segments that are misclassified. For instance, some doors that were identified in the mesh-based classification are labeled as walls in the point-based classification. These errors are critical as they lead to an excess of walls in the project. However, it is revealed that despite the mesh-based approach properly identifying more of these occurrences, it still produces some of the same false positives as the point-based segment approach. For instance, the built-in closet in

Figure 7 is misclassified by both methods due to its geometric similarity to a wall. This showcases the limitations of geometry-based classification tasks which are complementary to computer vision classification.

In summary, both methods are very capable of properly interpreting the majority of building environments through the proposed feature set. While meshing does improve the feature extraction and classification performance, one should consider the gain of adding such a preprocessing layer (meshing operation) for offline processes (which mesh-based classification are stuck to). As classification score performance goes, no distinct recommendation is emitted, as both obtain good scores under 1% difference.

7. Conclusions

This paper presents an overview of the impact of geometry representations on the classification of building components. More specifically, a comparison is made between point clouds and meshes which are the two most common geometry inputs for classification procedures. The goal is to study the differences between the two geometry types with respect to the segmentation, feature extraction and the classification. It is hypothesized that the difference in noise, holes, size and the amount of data can impact these procedures and by extent the success rate of geometry interpretation tasks. Through this study, developers and researchers can now make an informed decision about which geometry type to choose during classification procedures to achieve the desired result. This study is focused on the structural components of a building i.e., the floors, ceilings, walls and beams as these form the core of any structure and are the target of numerous classification procedures.

The following conclusions are derived from the experiments on the Stanford 2D-3D-Semantics Dataset (2D-3D-S). In terms of feature extraction, the experiments show improved feature discriminativeness and distinctness for the mesh-based features due to the reduced amounts of noise and holes compared to point clouds. On average, mesh-based segments are larger, have fewer overlapping features and have a lower dispersion than their point cloud counterparts. However, the impact on the overall classification itself is minimal based on the observed F1 scores. Overall, both methods have promising results (75.9% for point clouds and 75.5% for meshes) despite the clutter in the scenes. This shows that machine learning models such as Bagged Trees are robust against high variance datasets that are building environments. The final experiment is a visual inspection, which revealed that both methods can deal with typical false positives such as doors and closets. Overall, it is concluded that the preprocessing of point clouds to mesh geometry leads to more distinct and discriminative features but not necessarily to a better classification. However, the significant data reduction which is achieved during the meshing allows for more complex features to be computed from the segments while maintaining performance. In procedures which cannot afford this preprocessing or to limit the serialization of potential break points in unsupervised workflows, methods using point cloud segments also offer promising classification results.

In terms of future work, there are several research topics that can be further investigated. For instance, an interesting field of research is the switch from human driven features to machine generated features much like the ones used in computer vision. Significant work also must be done to extend the generalization of building classification to make the method more robust to the variety of objects in the scene. The retrieval of windows and doors is also considered to be the next step in the classification procedure, which can use the structure information as prior knowledge.