Remote Sensing of Submerged Aquatic Vegetation in a Shallow Non-Turbid River Using an Unmanned Aerial Vehicle

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. General Approach

2.3. UAV Description

2.4. Image Sensor

2.5. Data Acquisition Missions

2.6. Ground-Based Verification of Remote Sensing Results

2.7. Geometric Correction

2.8. Georeferencing and Mosaicking

2.9. Image Analysis and Algal Mapping

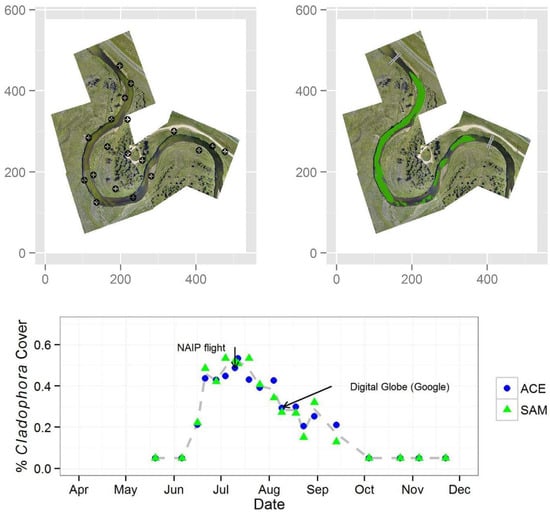

3. Results

| Attribute | Fitted Value (X, Y) | Uncertainty (±)ᵃ (X, Y) |
|---|---|---|
| Focal length (pixels) | 1782.384, 1782.453 | 3.257, 3.277 |
| Principal point | 1967.203, 1449.815 | 1.839, 1.276 |
| Pixel error | 1.23959, 1.25056 | --- |
| Distortion | | |
| k1 | −0.03606 | 0.00660 |
| k2 | 0.11243 | 0.02028 |
| k3 | −0.03936 | 0.02475 |
| k4 | 0.00198 | 0.01019 |
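The calibration table lists four distortion coefficients but does not name the lens model. The sketch below assumes they belong to the Kannala–Brandt equidistant fish-eye polynomial, θ_d = θ(1 + k1θ² + k2θ⁴ + k3θ⁶ + k4θ⁸), the form used by OpenCV's fisheye module, and assumes zero skew. Under those assumptions it shows how the fitted focal lengths, principal point, and k1–k4 map a camera-frame point to a pixel and back again, which is the step a geometric correction has to invert. The function names are illustrative, not taken from the study.

```python
import math

# Fitted intrinsics transcribed from the calibration table (pixels).
FX, FY = 1782.384, 1782.453
CX, CY = 1967.203, 1449.815
# ASSUMPTION: k1..k4 are Kannala-Brandt fish-eye coefficients,
# theta_d = theta * (1 + k1*theta^2 + k2*theta^4 + k3*theta^6 + k4*theta^8).
K = (-0.03606, 0.11243, -0.03936, 0.00198)


def distort_angle(theta):
    """Map the true incidence angle theta (radians) to the distorted angle theta_d."""
    t2 = theta * theta
    return theta * (1 + K[0] * t2 + K[1] * t2**2 + K[2] * t2**3 + K[3] * t2**4)


def distort_angle_derivative(theta):
    """d(theta_d)/d(theta), used by the Newton inversion below."""
    t2 = theta * theta
    return 1 + 3 * K[0] * t2 + 5 * K[1] * t2**2 + 7 * K[2] * t2**3 + 9 * K[3] * t2**4


def project(x, y, z):
    """Project a camera-frame point (z along the optical axis) to pixel (u, v)."""
    r = math.hypot(x, y)
    if r == 0.0:
        return CX, CY  # a point on the optical axis lands on the principal point
    theta = math.atan2(r, z)
    theta_d = distort_angle(theta)
    return FX * theta_d * x / r + CX, FY * theta_d * y / r + CY


def unproject(u, v, iterations=20):
    """Invert project(): return the unit viewing ray (x, y, z) for pixel (u, v)."""
    mx, my = (u - CX) / FX, (v - CY) / FY
    theta_d = math.hypot(mx, my)
    if theta_d == 0.0:
        return 0.0, 0.0, 1.0
    theta = theta_d  # initial guess: the polynomial is close to the identity
    for _ in range(iterations):  # Newton's method on distort_angle(theta) = theta_d
        theta -= (distort_angle(theta) - theta_d) / distort_angle_derivative(theta)
    s = math.sin(theta) / theta_d
    return mx * s, my * s, math.cos(theta)
```

Undistorting an image amounts to calling `unproject` for every output pixel's source location (or the reverse mapping with `project`), then resampling.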

| Band | μᵢ | Band 1 (Red) | Band 2 (Green) | Band 3 (Blue) |
|---|---|---|---|---|
| Band 1 (Red) | 78.73604237543 | 276.1323309468 | 271.4320405892 | 224.5080967758 |
| Band 2 (Green) | 78.76149526543 | 271.4320405892 | 291.3337301972 | 8.384664633906 |
| Band 3 (Blue) | 48.16133256564 | 224.5080967758 | 8.384664633906 | 103.5271385886 |
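The per-band mean vector μᵢ and the symmetric 3 × 3 band matrix above are the kind of statistics consumed by the adaptive coherence estimator (ACE) and the spectral angle mapper (SAM). The table does not say whether they describe the Cladophora target or the background, so the sketch below takes a target signature, a background mean, and a background covariance as generic inputs and uses made-up numbers in its demo. It implements the textbook forms of both detectors (squared cosine between target and pixel in the covariance-whitened space for ACE, plain vector angle for SAM) with the thresholds from the accuracy-assessment table (ACE ≥ 0.80, SAM ≤ 5°). It is a sketch under those assumptions, not necessarily the exact implementation used in the study.

```python
import numpy as np

# Decision thresholds taken from the accuracy-assessment table.
ACE_THRESHOLD = 0.80
SAM_THRESHOLD_DEG = 5.0


def ace_scores(pixels, target, bg_mean, bg_cov):
    """Adaptive coherence estimator for each row of `pixels` (N x bands).

    ACE = (s' C^-1 x)^2 / ((s' C^-1 s)(x' C^-1 x)), where s and x are the
    target and pixel measured from the background mean and C is the
    background covariance. Scores lie in [0, 1]; 1 means x is parallel to s.
    """
    cov_inv = np.linalg.inv(bg_cov)
    mu = np.asarray(bg_mean, dtype=float)
    s = np.asarray(target, dtype=float) - mu
    x = np.asarray(pixels, dtype=float) - mu
    num = (x @ cov_inv @ s) ** 2
    den = (s @ cov_inv @ s) * np.einsum("ij,jk,ik->i", x, cov_inv, x)
    # A pixel equal to the background mean has no direction; score it 0.
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)


def sam_angles_deg(pixels, reference):
    """Spectral angle in degrees between each pixel and the reference signature."""
    p = np.asarray(pixels, dtype=float)
    r = np.asarray(reference, dtype=float)
    norms = np.linalg.norm(p, axis=1) * np.linalg.norm(r)
    # An all-zero pixel gets cos = 0 (90 degrees), i.e. never matches.
    cos = np.divide(p @ r, norms, out=np.zeros(len(p)), where=norms > 0)
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


# HYPOTHETICAL demo values, not the study's statistics.
bg_mean = np.array([40.0, 45.0, 35.0])
bg_cov = np.array([[25.0, 5.0, 2.0], [5.0, 20.0, 1.0], [2.0, 1.0, 15.0]])
target = np.array([80.0, 80.0, 50.0])
pixels = np.array([[120.0, 115.0, 65.0], [40.0, 45.0, 35.0], [30.0, 90.0, 120.0]])

is_cladophora_ace = ace_scores(pixels, target, bg_mean, bg_cov) >= ACE_THRESHOLD
is_cladophora_sam = sam_angles_deg(pixels, target) <= SAM_THRESHOLD_DEG
```

ACE is invariant to pixel brightness along the target direction after background removal, while SAM is invariant to overall brightness scaling of the raw spectrum; the two therefore need different kinds of thresholds (a score versus an angle).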

| | ACE (Threshold of 0.80): Cladophora | ACE (Threshold of 0.80): Background | SAM (Spectral Angle of 5°): Cladophora | SAM (Spectral Angle of 5°): Background |
|---|---|---|---|---|
| Cladophora | 94,380 | 9057 | 94,904 | 6504 |
| Background | 13,769 | 120,907 | 13,245 | 123,460 |

ACE: overall accuracy = 90%, τ = 0.82. SAM: overall accuracy = 92%, τ = 0.84.
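The overall accuracies in the confusion matrices can be recomputed from the pixel counts, and the tau coefficient follows as τ = (P_o − P_r)/(1 − P_r), where P_o is the overall accuracy and P_r the chance agreement implied by the class prior probabilities. The table does not state which priors were used. This sketch assumes equal priors (P_r = 1/M for M classes), which gives τ ≈ 0.81 for ACE and ≈ 0.83 for SAM, slightly below the reported 0.82 and 0.84, so it illustrates the formula rather than reproducing the reported τ exactly. Both quantities depend only on the diagonal and the total, so the sketch does not need to know whether rows or columns hold the reference labels.

```python
# Confusion matrices transcribed from the table (pixel counts).
ACE_MATRIX = [[94_380, 9_057], [13_769, 120_907]]
SAM_MATRIX = [[94_904, 6_504], [13_245, 123_460]]


def overall_accuracy(matrix):
    """Fraction of pixels on the diagonal, i.e. correctly classified."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def tau_equal_priors(matrix):
    """Tau coefficient under the ASSUMPTION of equal priors: P_r = 1/M for M classes."""
    m = len(matrix)
    p_o = overall_accuracy(matrix)
    p_r = 1.0 / m
    return (p_o - p_r) / (1.0 - p_r)


for name, matrix in (("ACE", ACE_MATRIX), ("SAM", SAM_MATRIX)):
    print(f"{name}: overall accuracy = {overall_accuracy(matrix):.1%}, "
          f"tau (equal priors) = {tau_equal_priors(matrix):.2f}")
```

Running it prints an overall accuracy of 90.4% for ACE and 91.7% for SAM, which round to the 90% and 92% given in the table.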

| Date | % Cladophora Cover (ACE) | % Cladophora Cover (SAM) | Threshold (ACE) | Threshold (SAM, °) | Streamflow (m³∙s⁻¹) | SSCᵈ (mg∙L⁻¹) |
|---|---|---|---|---|---|---|
| 20 May 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 3.91 | 10 |
| 6 June 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 6.37 | 13 |
| 16 June 2013 | 0.21 | 0.22 | 0.80 | 2 | 6.46 | 16 (14) |
| 21 June 2013 | 0.44 | 0.48 | 0.80 | 3 | 5.24 | 7 |
| 28 June 2013 | 0.43 | 0.42 | 0.80 | 5 | 5.24 | 5 (2) |
| 4 July 2013 | 0.45 | 0.53 | 0.80 | 7 | 4.39 | 5 |
| 10 July 2013ᵇ | 0.49 | 0.52 | 0.80 | 5 | 3.43 | 7 |
| 12 July 2013 | 0.53 | 0.51 | 0.80 | 3 | 3.40 | 25 (2) |
| 19 July 2013 | 0.43 | 0.53 | 0.80 | 3 | 2.75 | 11 |
| 26 July 2013 | 0.39 | 0.41 | 0.80 | 2.5 | 1.81 | 11 (<1) |
| 4 August 2013 | 0.43 | 0.34 | 0.80 | 2 | 2.41 | 23 |
| 9 August 2013 | 0.29 | 0.27 | 0.80 | 1.5 | 1.61 | 19* (3) |
| 18 August 2013ᶜ | 0.30 | 0.27 | 0.80 | 3 | 1.87 | 14 |
| 23 August 2013 | 0.20 | 0.15 | 0.80 | 3 | 1.67 | 33* |
| 30 August 2013 | 0.25 | 0.32 | 0.80 | 1.5 | 2.15 | 21 |
| 13 September 2013 | 0.21 | 0.13 | 0.80 | 1 | 2.92 | 13* |
| 4 October 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 6.06 | (10) |
| 24 October 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 6.03 | (11) |
| 5 November 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 6.12 | — |
| 22 November 2013 | <0.05ᵃ | <0.05ᵃ | — | — | 5.58 | (31) |
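Because ACE and SAM were run side by side on every flight, the seasonal table also shows how closely the two classifiers agree. The sketch below transcribes the cover values as tabulated (in the table's units), omits the dates reported as <0.05 because they carry no point estimate, and reports the mean absolute ACE–SAM difference and the date of the largest gap. The dictionary and function names are illustrative.

```python
# (ACE, SAM) cover values transcribed from the table; "<0.05" dates omitted.
COVER = {
    "16 June 2013": (0.21, 0.22),
    "21 June 2013": (0.44, 0.48),
    "28 June 2013": (0.43, 0.42),
    "4 July 2013": (0.45, 0.53),
    "10 July 2013": (0.49, 0.52),
    "12 July 2013": (0.53, 0.51),
    "19 July 2013": (0.43, 0.53),
    "26 July 2013": (0.39, 0.41),
    "4 August 2013": (0.43, 0.34),
    "9 August 2013": (0.29, 0.27),
    "18 August 2013": (0.30, 0.27),
    "23 August 2013": (0.20, 0.15),
    "30 August 2013": (0.25, 0.32),
    "13 September 2013": (0.21, 0.13),
}


def ace_sam_agreement(cover):
    """Return (mean |ACE - SAM|, date of the largest gap, largest gap)."""
    gaps = {date: abs(ace - sam) for date, (ace, sam) in cover.items()}
    worst = max(gaps, key=gaps.get)
    return sum(gaps.values()) / len(gaps), worst, gaps[worst]


mean_gap, worst_date, worst_gap = ace_sam_agreement(COVER)
print(f"mean |ACE - SAM| = {mean_gap:.3f}; largest gap {worst_gap:.2f} on {worst_date}")
```

Over these 14 dates the two classifiers differ by about 0.05 on average and by at most 0.10 (19 July 2013).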

4. Discussion

4.1. UAV Use in Freshwater Benthic Ecology

4.2. Understanding Cladophora Behavior through UAV Remote Sensing

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).


Flynn, K.F.; Chapra, S.C. Remote Sensing of Submerged Aquatic Vegetation in a Shallow Non-Turbid River Using an Unmanned Aerial Vehicle. Remote Sens. 2014, 6, 12815–12836. https://doi.org/10.3390/rs61212815
