Luminance-Corrected 3D Point Clouds for Road and Street Environments

Abstract

1. Introduction

2. Study Area and Data Acquisition

2.1. Test Site

2.2. 3D Point Cloud

2.3. Image Acquisition

2.4. Luminance Calibration

- K is the calibration constant,

- Nd is the digital value Y of the pixel in the raw image, obtained with Equation 2,

- fs is the aperture (f-number),

- Ls is the luminance of the scene in cd/m²,

- t is the exposure time in seconds, and

- SISO is the ISO value.
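The calibration equation itself did not survive in this text, so the following is only a minimal sketch of how the listed quantities are usually combined. It assumes the common camera exposure model Nd = K · t · SISO · Ls / fs², solved for Ls, and it assumes that Equation 2 is the standard linear sRGB weighting Y = 0.2126 R + 0.7152 G + 0.0722 B. The function names and the example values are hypothetical and not taken from the paper.

```python
def relative_luminance(r, g, b):
    """Digital value Y from linear R, G, B pixel values.

    Assumption: the sRGB / Rec. 709 weights stand in for the paper's Equation 2.
    """
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def scene_luminance(n_d, f_s, t, s_iso, k):
    """Scene luminance L_s in cd/m^2.

    Assumed model: N_d = K * t * S_ISO * L_s / f_s**2, rearranged for L_s.
    n_d   -- digital value Y of the pixel
    f_s   -- aperture (f-number)
    t     -- exposure time in seconds
    s_iso -- ISO value
    k     -- calibration constant (camera specific, found by calibration)
    """
    if k <= 0 or t <= 0 or s_iso <= 0:
        raise ValueError("k, t and s_iso must be positive")
    return n_d * f_s ** 2 / (k * t * s_iso)


if __name__ == "__main__":
    # Hypothetical pixel and exposure settings, for illustration only.
    y = relative_luminance(40.0, 52.0, 30.0)
    print(scene_luminance(y, f_s=4.0, t=2.0, s_iso=100, k=1.0))
```

In practice K would be determined by photographing a reference source of known luminance. The value used here is a placeholder.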

3. Integration of Luminance Values and 3D Point Cloud

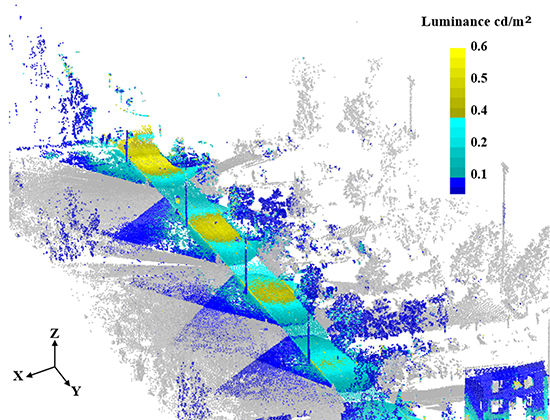

3.1. 3D Luminance Point Cloud

3.2. Statistical Analysis of Road Surface Luminance

| Lamp | Direction (Position) | Lm, Left Lane (cd/m²) | Lm, Right Lane (cd/m²) | Uo (%) | Ul, Left Lane (%) | Ul, Right Lane (%) |
|---|---|---|---|---|---|---|
| L2 | N (P1) | 0.211 | 0.221 | 0.425 | 0.286 | 0.267 |
| L2 | S (P2) | 0.206 | 0.214 | 0.433 | 0.385 | 0.286 |
| L3 | N (P2) | 0.316 | 0.307 | 0.446 | 0.368 | 0.389 |
| L3 | S (P3) | 0.298 | 0.280 | 0.396 | 0.389 | 0.353 |
| L4 | N (P3) | 0.383 | 0.369 | 0.495 | 0.478 | 0.500 |
| L4 | S (P4) | 0.346 | 0.346 | 0.399 | 0.318 | 0.364 |
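The quantities in the table can be computed from luminance samples on the road surface. The sketch below assumes the usual road lighting definitions in the sense of EN 13201: Lm is the mean luminance, Uo is the minimum divided by the mean over the evaluated area, and Ul is the minimum divided by the maximum along a lane centre line. How the samples were selected from the point cloud is not stated here, so the inputs are plain lists of hypothetical values, and the results are ratios such as those listed in the table.

```python
def mean_luminance(samples):
    """Average luminance L_m of the samples (cd/m^2)."""
    if not samples:
        raise ValueError("samples must not be empty")
    return sum(samples) / len(samples)


def overall_uniformity(samples):
    """U_o: lowest sample divided by the mean of all samples."""
    return min(samples) / mean_luminance(samples)


def longitudinal_uniformity(centre_line_samples):
    """U_l: lowest divided by highest sample along a lane centre line."""
    if not centre_line_samples:
        raise ValueError("centre_line_samples must not be empty")
    return min(centre_line_samples) / max(centre_line_samples)


if __name__ == "__main__":
    # Hypothetical luminance samples in cd/m^2, not measured data.
    lane_area = [0.12, 0.18, 0.25, 0.31, 0.22, 0.15]
    centre_line = [0.15, 0.22, 0.31, 0.25]
    print(round(mean_luminance(lane_area), 3))
    print(round(overall_uniformity(lane_area), 3))
    print(round(longitudinal_uniformity(centre_line), 3))
```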

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Vaaja, M.T.; Kurkela, M.; Virtanen, J.-P.; Maksimainen, M.; Hyyppä, H.; Hyyppä, J.; Tetri, E. Luminance-Corrected 3D Point Clouds for Road and Street Environments. Remote Sens. 2015, 7, 11389–11402. https://doi.org/10.3390/rs70911389
