Mapping Complex Urban Land Cover from Spaceborne Imagery: The Influence of Spatial Resolution, Spectral Band Set and Classification Approach

Abstract

1. Introduction

1.1. VHR Sensors

1.2. Enhanced Spectral Capabilities

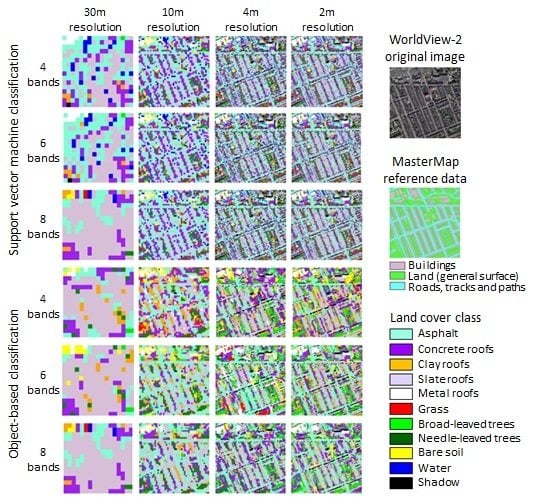

1.3. Pixel-Based versus Object-Based Classification

1.4. Mapping Complex Urban Land Cover

2. Research Materials

2.1. Study Area and Classification Schema

| Class | Description |
|---|---|
| Asphalt | Urban ground surfaces covered in asphalt such as roads and car parks |
| Concrete roofs | Predominantly residential buildings covered in dark grey concrete tiles |
| Clay roofs | Predominantly residential buildings covered in red clay tiles |
| Slate roofs | Predominantly residential buildings covered in light grey slate tiles |
| Metal roofs | Predominantly industrial buildings covered in white metal panels |
| Grass | Areas of grassland such as urban parks and lawns, plus surrounding rural agriculture |
| Broad-leaved trees | Patches of deciduous broad-leaved trees |
| Needle-leaved trees | Patches of evergreen needle-leaved trees |
| Bare soil | Open areas covered by bare soil |
| Water | Water bodies including lakes, rivers, ponds and canals |
| Shadow | Areas of shadow cast from tall structures such as buildings and trees |

2.2. Image and Reference Data

3. Research Methods

- Maximum likelihood classification: a parametric pixel-based approach;

- Support vector machine classification: a non-parametric pixel-based approach; and

- Object-based classification.
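
The parametric decision rule behind maximum likelihood classification can be illustrated with a minimal pure-Python sketch. It assumes multivariate normal class distributions and equal prior probabilities; the function names (`train_mlc`, `classify_mlc`, `invert_with_det`) and the two-band training pixels are hypothetical and stand in for the study's actual software and training data. Each pixel is assigned to the class with the largest Gaussian discriminant, `g(x) = -0.5 ln|S| - 0.5 (x - m)^T S^-1 (x - m)`, where `m` and `S` are the class mean vector and covariance matrix.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mean_vector(samples: Sequence[Sequence[float]]) -> Vector:
    """Per-band mean of one class's training pixels."""
    n = len(samples)
    return [sum(s[b] for s in samples) / n for b in range(len(samples[0]))]


def covariance(samples: Sequence[Sequence[float]], mu: Vector) -> Matrix:
    """Sample covariance matrix (n - 1 denominator) across bands."""
    n, d = len(samples), len(mu)
    return [[sum((s[i] - mu[i]) * (s[j] - mu[j]) for s in samples) / (n - 1)
             for j in range(d)] for i in range(d)]


def invert_with_det(a: Matrix) -> Tuple[Matrix, float]:
    """Gauss-Jordan elimination with partial pivoting: returns (inverse, determinant)."""
    d = len(a)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(d)]
           for i, row in enumerate(a)]
    det = 1.0
    for col in range(d):
        pivot = max(range(col, d), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular covariance: too few or collinear training pixels")
        if pivot != col:
            aug[col], aug[pivot] = aug[pivot], aug[col]
            det = -det  # a row swap flips the sign of the determinant
        p = aug[col][col]
        det *= p  # the determinant is the signed product of the pivots
        aug[col] = [v / p for v in aug[col]]
        for r in range(d):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[d:] for row in aug], det


def train_mlc(training: Dict[str, List[Vector]]):
    """Estimate each class's mean, inverse covariance and log-determinant."""
    model = {}
    for label, samples in training.items():
        mu = mean_vector(samples)
        inv, det = invert_with_det(covariance(samples, mu))
        model[label] = (mu, inv, math.log(det))
    return model


def classify_mlc(model, x: Sequence[float]) -> str:
    """Return the class with the largest Gaussian discriminant (equal priors assumed)."""
    def discriminant(mu, inv, log_det):
        diff = [xi - mi for xi, mi in zip(x, mu)]
        mahalanobis_sq = sum(diff[i] * inv[i][j] * diff[j]
                             for i in range(len(diff)) for j in range(len(diff)))
        return -0.5 * log_det - 0.5 * mahalanobis_sq
    return max(model, key=lambda label: discriminant(*model[label]))


# Hypothetical two-band training pixels for two classes.
training = {
    "Water": [[10.0, 8.0], [12.0, 9.0], [11.0, 7.5], [9.5, 8.5]],
    "Grass": [[40.0, 90.0], [42.0, 95.0], [38.0, 88.0], [41.0, 93.0]],
}
model = train_mlc(training)
print(classify_mlc(model, [11.0, 8.2]))  # Water
```

Because a covariance matrix estimated from n pixels has rank at most n - 1, each class needs at least d + 1 training pixels in d bands (in practice many more) for the matrix to be invertible. A non-parametric classifier such as the support vector machine does not rely on this normal-distribution assumption.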

3.1. Pixel-Based Class Training

3.2. Pixel-Based Classification

3.3. Object-Based Classification

| Input Image Spatial Resolution (m) | Stage 1. Separation of Vegetation and Non-Vegetation: Segmentation Parameters (Scale, Shape, Compactness) | Stage 1: Spectral Merging Threshold | Stage 2a. Identification of Individual Vegetation Classes: Segmentation Parameters (Scale, Shape, Compactness) | Stage 2a: Spectral Merging Threshold | Stage 2b. Identification of Individual Non-Vegetation Classes: Segmentation Parameters (Scale, Shape, Compactness) | Stage 2b: Spectral Merging Threshold |
|---|---|---|---|---|---|---|
| 30 | 6, 0.3, 0.8 | NA | 7, 0.3, 0.8 | NA | 5, 0.3, 0.8 | NA |
| 10 | 10, 0.3, 0.8 | NA | 12, 0.3, 0.8 | NA | 6, 0.3, 0.8 | NA |
| 4 | 20, 0.3, 0.8 | NA | 30, 0.3, 0.8 | NA | 12, 0.3, 0.8 | NA |
| 2 | 25, 0.3, 0.8 | 20 | 35, 0.3, 0.8 | 35 | 17, 0.3, 0.8 | 10 |
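
The scale, shape and compactness triplets in the table above match the parameters of eCognition's multiresolution segmentation, which, as it is usually described (Baatz and Schäpe's fusion criterion), merges two adjacent objects only while the resulting increase in heterogeneity stays below a threshold set by the scale parameter. The increase is `f = (1 - shape) * h_color + shape * h_shape`, with `h_shape = compactness * h_cmpct + (1 - compactness) * h_smooth`, so the setting of 0.3 and 0.8 gives spectral information 70% of the weight. The pure-Python sketch below is illustrative rather than eCognition's implementation: segments are hypothetical sets of pixel coordinates, standard deviations are population values, and the scale-squared threshold in the hypothetical `accept_merge` function is an assumption. The spectral merging threshold applied at 2 m is not modelled.

```python
import math
from typing import Dict, List, Optional, Set, Tuple

Pixel = Tuple[int, int]
Stats = Tuple[int, List[float], int, int]  # (pixel count, band std devs, perimeter, bbox perimeter)


def segment_stats(pixels: Set[Pixel], bands: List[Dict[Pixel, float]]) -> Stats:
    """Size, per-band standard deviation, perimeter and bounding-box perimeter."""
    n = len(pixels)
    stds = []
    for band in bands:
        values = [band[p] for p in pixels]
        mean = sum(values) / n
        stds.append(math.sqrt(sum((v - mean) ** 2 for v in values) / n))
    # Perimeter = number of pixel edges not shared with another pixel of the segment.
    perimeter = sum(1 for (r, c) in pixels
                    for nb in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                    if nb not in pixels)
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    bbox_perimeter = 2 * ((max(rows) - min(rows) + 1) + (max(cols) - min(cols) + 1))
    return n, stds, perimeter, bbox_perimeter


def fusion_cost(seg1: Set[Pixel], seg2: Set[Pixel], bands: List[Dict[Pixel, float]],
                shape: float = 0.3, compactness: float = 0.8,
                band_weights: Optional[List[float]] = None) -> float:
    """Increase in heterogeneity f if two adjacent segments were merged."""
    s1, s2, sm = (segment_stats(s, bands) for s in (seg1, seg2, seg1 | seg2))
    weights = band_weights or [1.0] * len(bands)
    # Colour term: size-weighted growth in per-band standard deviation.
    h_color = sum(w * (sm[0] * sm[1][k] - (s1[0] * s1[1][k] + s2[0] * s2[1][k]))
                  for k, w in enumerate(weights))

    def cmpct(s: Stats) -> float:   # n * l / sqrt(n)
        return s[0] * s[2] / math.sqrt(s[0])

    def smooth(s: Stats) -> float:  # n * l / b
        return s[0] * s[2] / s[3]

    h_cmpct = cmpct(sm) - (cmpct(s1) + cmpct(s2))
    h_smooth = smooth(sm) - (smooth(s1) + smooth(s2))
    h_shape = compactness * h_cmpct + (1 - compactness) * h_smooth
    return (1 - shape) * h_color + shape * h_shape


def accept_merge(cost: float, scale: float) -> bool:
    """Assumed stopping rule: merge only while f stays below scale squared."""
    return cost < scale ** 2


# Two adjacent single-pixel segments in a hypothetical one-band image.
band = {(0, 0): 0.0, (0, 1): 100.0}
cost = fusion_cost({(0, 0)}, {(0, 1)}, [band])
print(round(cost, 2), accept_merge(cost, scale=6), accept_merge(cost, scale=10))  # 70.12 False True
```

Because a larger scale parameter tolerates a larger increase in heterogeneity, it produces larger objects for the same image; in the table the scale value rises as pixel size falls (for Stage 1, from 6 at 30 m to 25 at 2 m).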

3.4. Accuracy Assessment and Statistical Testing

4. Results

| Predicted Class (rows) vs. Reference Class (columns) | Asphalt | Concrete Roofs | Clay Roofs | Metal Roofs | Slate Roofs | Grass | Broad-leaved Trees | Needle-leaved Trees | Bare Soil | Water | Shadow | User's Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Asphalt | 37 | 1 | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 93% |
| Concrete roofs | 4 | 38 | 4 | 0 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 78% |
| Clay roofs | 0 | 1 | 34 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 94% |
| Metal roofs | 0 | 0 | 0 | 39 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 100% |
| Slate roofs | 1 | 1 | 0 | 0 | 35 | 0 | 0 | 0 | 0 | 0 | 0 | 95% |
| Grass | 1 | 0 | 0 | 0 | 0 | 36 | 0 | 0 | 1 | 0 | 1 | 92% |
| Broad-leaved trees | 0 | 0 | 0 | 0 | 0 | 5 | 40 | 8 | 0 | 0 | 0 | 75% |
| Needle-leaved trees | 0 | 0 | 0 | 0 | 0 | 0 | 2 | 30 | 0 | 0 | 0 | 94% |
| Bare soil | 0 | 0 | 1 | 1 | 0 | 0 | 0 | 0 | 29 | 0 | 0 | 94% |
| Water | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 43 | 0 | 100% |
| Shadow | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 39 | 100% |
| Producer's Accuracy | 86% | 93% | 85% | 98% | 88% | 88% | 95% | 79% | 97% | 100% | 98% | |

Overall classification accuracy = 91%
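
The accuracy figures in the matrix above follow the standard definitions: user's accuracy divides each diagonal count by its row (predicted) total, producer's accuracy divides it by its column (reference) total, and overall accuracy divides the diagonal sum by the grand total (here 400 of 438 samples, or 91.3%). The short Python sketch below recomputes them from the first matrix; the function name `accuracy_measures` is illustrative, and values are printed to one decimal place so that half-percent cases (for example 37/40 = 92.5%) are not hidden by rounding.

```python
from typing import List, Tuple

CLASSES = ["Asphalt", "Concrete roofs", "Clay roofs", "Metal roofs", "Slate roofs",
           "Grass", "Broad-leaved trees", "Needle-leaved trees", "Bare soil",
           "Water", "Shadow"]

# Rows are predicted classes, columns are reference classes (first matrix above).
MATRIX = [
    [37, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0],
    [4, 38, 4, 0, 3, 0, 0, 0, 0, 0, 0],
    [0, 1, 34, 0, 1, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 39, 0, 0, 0, 0, 0, 0, 0],
    [1, 1, 0, 0, 35, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 36, 0, 0, 1, 0, 1],
    [0, 0, 0, 0, 0, 5, 40, 8, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 2, 30, 0, 0, 0],
    [0, 0, 1, 1, 0, 0, 0, 0, 29, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 43, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 39],
]


def accuracy_measures(m: List[List[int]]) -> Tuple[List[float], List[float], float]:
    """Return (user's accuracies, producer's accuracies, overall accuracy)."""
    k = len(m)
    diagonal = [m[i][i] for i in range(k)]
    row_totals = [sum(row) for row in m]                             # predicted totals
    col_totals = [sum(m[i][j] for i in range(k)) for j in range(k)]  # reference totals
    users = [d / t if t else 0.0 for d, t in zip(diagonal, row_totals)]
    producers = [d / t if t else 0.0 for d, t in zip(diagonal, col_totals)]
    overall = sum(diagonal) / sum(row_totals)
    return users, producers, overall


users, producers, overall = accuracy_measures(MATRIX)
for name, ua, pa in zip(CLASSES, users, producers):
    print(f"{name:<20} user's {ua:6.1%}   producer's {pa:6.1%}")
print(f"Overall accuracy: {overall:.1%}")
```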

| Predicted Class (rows) vs. Reference Class (columns) | Asphalt | Concrete Roofs | Clay Roofs | Metal Roofs | Slate Roofs | Grass | Broad-leaved Trees | Needle-leaved Trees | Bare Soil | Water | Shadow | User's Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Asphalt | 14 | 9 | 2 | 5 | 7 | 2 | 7 | 0 | 3 | 6 | 12 | 21% |
| Concrete roofs | 16 | 18 | 15 | 1 | 11 | 0 | 4 | 1 | 2 | 3 | 7 | 23% |
| Clay roofs | 6 | 6 | 18 | 2 | 6 | 5 | 4 | 3 | 0 | 0 | 5 | 33% |
| Metal roofs | 0 | 0 | 0 | 25 | 2 | 0 | 0 | 0 | 2 | 0 | 6 | 71% |
| Slate roofs | 3 | 3 | 1 | 0 | 12 | 0 | 0 | 0 | 0 | 0 | 2 | 57% |
| Grass | 0 | 1 | 0 | 0 | 0 | 12 | 7 | 5 | 2 | 0 | 0 | 44% |
| Broad-leaved trees | 0 | 2 | 1 | 0 | 1 | 12 | 13 | 16 | 3 | 1 | 1 | 26% |
| Needle-leaved trees | 0 | 0 | 0 | 0 | 0 | 1 | 4 | 2 | 0 | 5 | 1 | 15% |
| Bare soil | 3 | 3 | 1 | 7 | 0 | 6 | 1 | 9 | 16 | 2 | 2 | 32% |
| Water | 0 | 1 | 0 | 0 | 0 | 2 | 0 | 0 | 0 | 22 | 4 | 76% |
| Shadow | 0 | 0 | 2 | 0 | 2 | 0 | 2 | 1 | 2 | 3 | 0 | 0% |
| Producer's Accuracy | 33% | 42% | 45% | 63% | 29% | 30% | 31% | 5% | 53% | 52% | 0% | |

Overall classification accuracy = 35%
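
Whether two overall accuracies differ significantly can be checked with a test on proportions. A minimal sketch, assuming the two matrices above were built from independent test samples, is the two-proportion z-test; it is an illustration and not necessarily the test used in the study. If both classifications were evaluated on the same samples, McNemar's test on the paired outcomes would be the appropriate choice instead. The counts are the diagonal sums and grand totals of the two matrices above (400 of 438 and 152 of 437 samples correct), and the function names are hypothetical.

```python
import math


def two_proportion_z(correct1: int, n1: int, correct2: int, n2: int) -> float:
    """z statistic for the difference between two independent overall accuracies."""
    p1, p2 = correct1 / n1, correct2 / n2
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return (p1 - p2) / se


def two_sided_p(z: float) -> float:
    """Two-sided p-value under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


# Correct samples and totals taken from the two confusion matrices above.
z = two_proportion_z(400, 438, 152, 437)
print(f"z = {z:.2f}, two-sided p = {two_sided_p(z):.2e}")
```

A |z| above 1.96 indicates a difference that is significant at the 5% level (two-sided).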

5. Discussion

5.1. The Key Role of Spatial Resolution

5.2. Spectral Data Dimensionality

5.3. Classifier Choice

5.4. Project Requirements versus Project Resources

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Momeni, R.; Aplin, P.; Boyd, D.S. Mapping Complex Urban Land Cover from Spaceborne Imagery: The Influence of Spatial Resolution, Spectral Band Set and Classification Approach. Remote Sens. 2016, 8, 88. https://doi.org/10.3390/rs8020088
