1. Introduction

Leaf area index (LAI) is a key parameter that determines the photosynthesis, respiration, and transpiration of vegetation [1,2]. Real-time monitoring of crop LAI not only helps to assess crop health and nutrient status but also provides effective technical support for fertilizer application and water management [3].

Remote sensing (RS) techniques are widely used in agriculture and agronomy [4]. A common approach to monitoring crop LAI uses data (vegetation indices, canopy cover, and so on) acquired by remote sensing sensors on ground-based [5], satellite, or airborne [6,7] platforms. However, ground-based platforms measure individual locations and are therefore inefficient for wall-to-wall mapping; satellite data often fail to meet spatial and temporal resolution requirements [8,9]; and manned airborne campaigns can acquire high spatial resolution data over relatively large areas, but they are costly. Unmanned aerial vehicles (UAVs) have emerged in recent decades to overcome the deficiencies of these platforms, especially for precision agriculture, because UAVs can acquire data with high temporal frequency and high spatial resolution at low cost [8,9,10]. A recent study [11] compared three data sources for retrieving soybean LAI: the ground-based ASD FieldSpec Pro spectroradiometer (Analytical Spectral Devices, Boulder, CO, USA), an ADC-Lite multispectral sensor mounted on a UAV, and GaoFen-1 satellite imagery. It found that the UAV data achieved nearly the same high accuracy as the ASD hyperspectral spectroradiometer.

Because of these advantages, UAVs have been used in a growing number of studies to estimate vegetation LAI, mainly through vegetation indices (VIs) derived from the reflectance recorded by RGB digital cameras mounted on the UAVs [11,12]. For example, Chianucci [12] calculated VIs from the red, green, and blue bands of UAV true-color digital images to estimate beech forest LAI. Despite these efforts, the accuracy of LAI prediction was not high [11,12]. This could be attributed to the consumer-grade digital cameras used in these studies, which normally capture true-color imagery with three bands (blue, green, and red) in the visible spectrum. However, considerable research has shown that vegetation reflectance in the NIR region is more strongly influenced by LAI than reflectance in the visible region [13]. Therefore, a few studies have attempted to develop sensors that replace one of the blue, green, or red bands with a NIR band. For example, Hunt et al. [14] acquired NIR-green-blue digital photographs from unmanned aircraft and then used the GNDVI (Green Normalized Difference Vegetation Index), computed from the green and NIR channels, to monitor crop LAI. However, their sensor had only three bands.

Some studies have attempted to increase the number of bands by integrating several cameras on one platform, which, however, can complicate image pre-processing. For example, Lelong et al. [15] fitted filters to commercially available digital cameras (Canon EOS 350D and Sony DSC-F828) to build a new four-band sensor (blue, green, red, and NIR) and obtained better predictions (RMSE = 0.57, R² = 0.82). However, they reported that data pre-processing was quite complex and that data quality needed to be improved.

These studies mostly examined only the NIR band on the high-reflectance "plateau" (around 720–850 nm) and developed corresponding VIs for estimating LAI. However, other bands, especially the red-edge bands, could also be highly useful for predicting LAI [13]. There is an urgent need to test how well UAVs carrying sensors with more bands, especially red-edge and NIR bands, can estimate LAI. Only a few studies have used UAV multispectral imagery that includes red-edge and NIR bands, for applications such as seed management [9], olive disease monitoring [10], identification of pasture variation [16], and estimation of carotenoid content in conifer forests [17]. Furthermore, UAVs are a promising remote sensing platform that has gained increasing attention for crops. For example, Jin et al. [18] estimated wheat plant density from UAV RGB images, and Zhou et al. [19] predicted rice grain yield using multi-temporal vegetation indices from UAV-based multispectral and digital imagery.

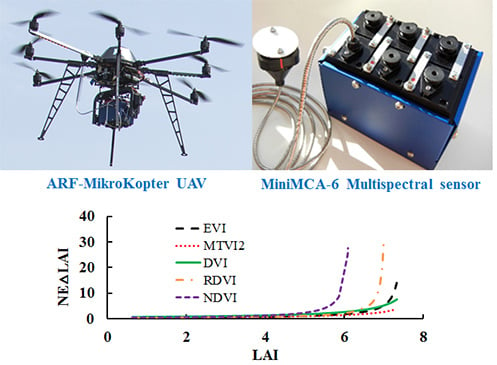

The LAI of crops at the mature stage can reach as high as 7, a level at which optical remote sensing imagery usually suffers from saturation [19]. Another research gap is that previous studies of UAV-based estimation of crop LAI often focused on only a few growth stages. For example, Hunt et al. [14] acquired imagery of winter wheat only on 4 May, at the flowering stage. To the best of our knowledge, apart from Lelong [15] and Potgieter [20], no studies have used UAVs to estimate crop LAI across the whole critical growth period, which can span a large LAI range. In addition, most researchers estimate wheat LAI using the normalized difference vegetation index (NDVI). NDVI is one of the most extensively applied VIs for estimating LAI, but it is effective only at relatively low LAI values [21]. Later, many studies proposed other effective VIs for estimating LAI, e.g., the enhanced vegetation index (EVI) [22], the modified triangular vegetation index (MTVI2) [23], and the (optimized) soil-adjusted vegetation index (SAVI and OSAVI) [24,25], but these VIs were mostly developed using satellite or airborne sensors. Evaluating them is therefore essential before an empirically optimized VI model is used to estimate growth parameters such as LAI from UAV images. There is an urgent need to assess the performance of commonly used VIs from UAV narrowband multispectral sensors for estimating crop LAI across all growth stages.

Wheat is one of the main cereal crops in the world. The number of large family wheat farms is increasing rapidly in China, and these farms need accurate and frequent monitoring of growth status to guide fertilizer and water management [26]. Owing to its low cost, high efficiency, flexible flight altitude, and on-demand scheduling of acquisitions, the UAV has become an appropriate tool for modern family farms [27].

Our study has the following objectives: (1) to quantify the relationship between wheat LAI and various VIs from narrowband multispectral imagery; (2) to identify the most powerful VIs over a wide range of growth conditions; (3) to examine the sensitivity of the VIs to LAI at medium to high levels; and (4) to evaluate the generality of the VIs for LAI estimation using an independent test dataset with different cultivars, densities, and nitrogen rates during the critical growth period. The results are intended to provide technical support for effectively monitoring crop growth and for nitrogen management decisions at the farm scale.
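Objective (4) amounts to calibrating a VI-LAI model on one dataset and scoring it on an independent one. The following Python sketch assumes a simple linear model, LAI = a·VI + b, fitted by ordinary least squares, and reports R² and RMSE on held-out samples; the model form, the helper names `fit_linear` and `r2_rmse`, and all numbers are hypothetical illustrations, not the calibration used in this study.

```python
from math import sqrt


def fit_linear(x, y):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx


def r2_rmse(observed, predicted):
    """R^2 as 1 - SS_res/SS_tot, and root-mean-square error."""
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot, sqrt(ss_res / n)


# Hypothetical calibration set: VI values and measured LAI (not study data).
cal_vi = [0.30, 0.45, 0.55, 0.65, 0.75]
cal_lai = [1.9, 3.1, 4.0, 5.0, 6.1]
a, b = fit_linear(cal_vi, cal_lai)

# Hypothetical independent test set (e.g. another cultivar or N rate).
test_vi = [0.35, 0.50, 0.70]
test_lai = [2.4, 3.5, 5.4]
pred = [a * v + b for v in test_vi]
r2, rmse = r2_rmse(test_lai, pred)
print(f"LAI = {a:.2f}*VI + {b:.2f}; test R2 = {r2:.2f}, RMSE = {rmse:.2f}")
```

Here R² is computed as 1 − SS_res/SS_tot on the test set, which can differ from the squared correlation coefficient that some studies report; this section does not state which definition the study used.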

4. Discussion

4.1. Performance of Diverse VIs on Estimating Wheat LAI

In this study, 10 commonly used VIs (RVI, NDVI, MTVI2, SAVI, GBNDVI, RDVI, GNDVI, MSR, EVI, and DVI) derived from narrowband multispectral imagery at five critical growth stages were systematically analyzed for estimating wheat LAI. Considering accuracy, stability, and sensitivity together, MTVI2 performed best, which is consistent with Smith [43].

MTVI2 was originally constructed from three wavebands (800 nm, 550 nm, and 670 nm) [23]. In this paper, we used 800 nm, 550 nm, and 700 nm to calculate it. The 700 nm and 800 nm bands lie in the red-edge region and the near-infrared range, respectively. Previous studies showed that red-edge indices (680–760 nm) are sensitive to LAI and chlorophyll [13,44,45,46,47], and that the short-wave near-infrared range (780–1100 nm) is sensitive to canopy structure and water content and can probe deeper into the canopy under high biomass [48,49]. This explains the strong performance of MTVI2 in this paper.
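To make the band substitution concrete, the sketch below writes MTVI2 in its widely cited form [23], with the 670 nm red term exposed as a parameter so that the 700 nm red-edge band used in this paper can be passed in its place. The function name and the reflectance values in the example are hypothetical.

```python
from math import sqrt


def mtvi2(r800, r550, r_red):
    """Modified triangular vegetation index 2.

    MTVI2 = 1.5 * [1.2*(R800 - R550) - 2.5*(Rred - R550)]
            / sqrt((2*R800 + 1)^2 - (6*R800 - 5*sqrt(Rred)) - 0.5)

    Rred is the 670 nm band in the original definition; this paper
    substitutes the 700 nm red-edge band.
    """
    numerator = 1.5 * (1.2 * (r800 - r550) - 2.5 * (r_red - r550))
    denominator = sqrt((2 * r800 + 1) ** 2 - (6 * r800 - 5 * sqrt(r_red)) - 0.5)
    return numerator / denominator


# Hypothetical canopy reflectances at 800, 550 and 700 nm.
print(round(mtvi2(r800=0.45, r550=0.08, r_red=0.12), 3))
```

For image rasters, the same expression can be applied element-wise by swapping `math.sqrt` for `numpy.sqrt` and passing band arrays. The denominator stays positive for non-negative reflectances, because the term under the root simplifies to 4·R800² − 2·R800 + 0.5 + 5·√Rred, which is at least 0.25.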

Other VIs, such as RDVI (800, 700), DVI (800, 700), NDVI (800, 700), GNDVI (800, 550), RVI (800, 700), SAVI (800, 700), MSR (800, 700), EVI (490, 700, 800), and GBNDVI (490, 550, 800), also performed reasonably well, but some of them saturated at the moderate-to-high LAI levels often found in high-yielding wheat crops.
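The other indices can be written with the band pairings listed above (NIR = 800 nm, the 700 nm red-edge band in the "red" role, green = 550 nm, blue = 490 nm). The formulas and constants below (the SAVI soil factor L = 0.5 and the EVI coefficients 2.5, 6, 7.5, and 1) are the commonly cited literature forms; that the study used exactly these constants is an assumption, and the function name and reflectance values are hypothetical.

```python
from math import sqrt


def vegetation_indices(b490, b550, b700, b800):
    """Common VI formulas using the band pairings listed in the text.

    NIR = 800 nm, 'red' = 700 nm (red-edge), green = 550 nm, blue = 490 nm.
    """
    nir, red, green, blue = b800, b700, b550, b490
    sr = nir / red  # simple ratio, reused by MSR
    return {
        "RVI": sr,
        "NDVI": (nir - red) / (nir + red),
        "DVI": nir - red,
        "RDVI": (nir - red) / sqrt(nir + red),
        "SAVI": 1.5 * (nir - red) / (nir + red + 0.5),  # soil factor L = 0.5
        "MSR": (sr - 1) / sqrt(sr + 1),
        "GNDVI": (nir - green) / (nir + green),
        "EVI": 2.5 * (nir - red) / (nir + 6 * red - 7.5 * blue + 1),
        "GBNDVI": (nir - (green + blue)) / (nir + (green + blue)),
    }


# Hypothetical canopy reflectances.
vis = vegetation_indices(b490=0.04, b550=0.08, b700=0.12, b800=0.45)
for name, value in vis.items():
    print(f"{name:7s} {value:.3f}")
```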

4.2. Saturation of VIs under Varied LAI Levels in Wheat

Saturation of VIs is one of the major limitations in estimating biophysical parameters by linear regression. Some previous studies demonstrated that NDVI was accurate and robust in LAI estimation for various vegetation types; however, NDVI loses accuracy because of saturation in relatively dense canopies (LAI > 2 m² m⁻²) [50,51]. Smith et al. [43] also showed that NDVI saturates when LAI reaches 3–4, and that the NDVI-derived model underestimated LAI during the grain-filling period. Later, NDVI was used to monitor growth status from UAV canopy imagery, but serious saturation appeared [14,15]. Hunt [52] also found that TGI (670, 480, 550) was not sensitive to changes in LAI above 2.0. Recently, a new index, mNDSI (ρ940, 0.8ρ950, ρ730), which is more sensitive at LAI from 2 to 8 m² m⁻², was proposed by the authors' laboratory [51]. Although this paper did not specifically address the saturation issue, we fully assessed the saturation of the selected VIs, and the results showed that the new MTVI2-based model had higher sensitivity across different LAI ranges. Moreover, the model could be applied over an LAI range of 2 to 7 m² m⁻², which greatly extends the range of application compared with previous models.
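One common way to quantify saturation, and an assumption here since this section does not state the method used, is the noise equivalent of LAI: NE = RMSE(VI) / |d(VI)/d(LAI)|, i.e., the LAI change that produces a VI change equal to the model's scatter. As an index saturates, its slope shrinks and NE grows. The sketch uses a hypothetical saturating model, VI = a − b·exp(−c·LAI), with made-up coefficients for a fast- and a slow-saturating index; it illustrates the concept and does not reproduce the study's fitted curves.

```python
from math import exp


def saturating_vi(lai, a, b, c):
    """Hypothetical saturating VI-LAI model: VI = a - b*exp(-c*LAI)."""
    return a - b * exp(-c * lai)


def slope(lai, a, b, c):
    """Analytical derivative d(VI)/d(LAI) = b*c*exp(-c*LAI)."""
    return b * c * exp(-c * lai)


def noise_equivalent(lai, rmse_vi, a, b, c):
    """NE = RMSE of VI about the fit / |d(VI)/d(LAI)|, in LAI units.

    Larger NE means the index resolves LAI changes less well.
    """
    return rmse_vi / abs(slope(lai, a, b, c))


# Illustrative coefficients only (not fitted to any real data).
fast_saturating = dict(a=0.90, b=0.80, c=0.60)
slow_saturating = dict(a=0.80, b=0.75, c=0.25)
RMSE_VI = 0.03  # hypothetical scatter of VI around the fitted curve

for lai in (2, 4, 6):
    ne_fast = noise_equivalent(lai, RMSE_VI, **fast_saturating)
    ne_slow = noise_equivalent(lai, RMSE_VI, **slow_saturating)
    vi_fast = saturating_vi(lai, **fast_saturating)
    print(f"LAI {lai}: VI(fast) {vi_fast:.3f}, NE fast {ne_fast:.2f}, NE slow {ne_slow:.2f}")
```

In this toy setting the fast-saturating index resolves LAI slightly better at LAI = 2 but several times worse at LAI = 6, which is the pattern described above for NDVI in dense canopies.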

4.3. Effect of LAI Estimation on the Application of the Methodology

The leaf area index is an important descriptor of many biological and physical processes of vegetation. Most currently available ground-based methods for estimating LAI involve either direct destructive sampling and point-contact sampling, or indirect light-transmittance (LAI-2000 Plant Canopy Analyzer) or spectral reflectance (Analytical Spectral Devices) methods. These instruments are difficult to move from one site to another and can map only small extents. On the other hand, satellite sensors do not meet the requirements for image temporal frequency and spatial resolution in agricultural applications such as crop monitoring. Remote sensing sensors mounted on unmanned aerial vehicles, however, are a promising option to fill this gap, providing low-cost, high-efficiency approaches that meet the critical requirements of spatial, spectral, and temporal resolution.

In this research, we found that the MTVI2-based LAI model achieved better accuracy, higher efficiency, and lower error, and remained sensitive across a wide range of LAI values (from 2 to 7). Simple but efficient methods for monitoring vegetation across a wide range of LAI are urgently needed for precision agriculture in China, where most fields have high LAI. In recent years, nitrogen fertilizer application has increased year by year to meet the demand for high yields, which has led to larger LAI than before. Therefore, monitoring LAI at medium and high levels is important in practical production. In addition, the high cost of the equipment used here may be borne by owners of larger farms or by farmers' associations that can share both the benefits and the costs, which is also important in China, where most farmers are not wealthy.

5. Conclusions

The major findings of this study show that MTVI2 (800, 700, 550) performed best in monitoring wheat LAI during the five critical growth stages, and that the newly developed LAI model showed higher stability across the sub-strategies (including varied growth status), which broadens the applicable range (maximum LAI of 7) compared with the existing LAI model. The spatial distribution map represents the dynamic change of wheat LAI and provides relevant information for fertilizer practices. In summary, UAV technology is feasible for monitoring crop LAI in high-nitrogen fields at the farm scale in modern China. Accurate monitoring of wheat LAI is not only important for assessing growth status but also provides crucial technical support for managing fertilizer applications on family farms. Previous studies have shown that a critical N concentration curve based on LAI can be an effective approach to diagnose nutrient status and optimize N management in precision agriculture [53,54].

In practical use, a farm of 5–10 ha could be fully covered with only 6–12 images at a flight altitude of 150 m, and the flight plus image pre-processing take about an hour, which is highly efficient and low cost. However, work remains to make the technology simple and easy to use before it can guide fertilization. It is still difficult for a typical farmer to apply this technique to calculate LAI, since not all farmers can operate the UAV and pre-process the imagery (vignette reduction, lens distortion correction, band registration, radiometric calibration, and background removal) without training. Therefore, future research should develop a simple, easy-to-use LAI estimation software system for UAVs. In addition, future work should consider carrying hyperspectral sensors on UAVs to obtain more information, and fully exploiting textural information to enhance the accuracy, stability, and universality of the model.
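Two of the pre-processing steps listed above lend themselves to a short illustration. The sketch assumes an empirical-line radiometric calibration against reference panels of known reflectance (fitted separately for each band) and a simple NDVI (800, 700) threshold to separate canopy from soil background; the panel values, the 0.2 threshold, and the helper names are hypothetical. Vignette reduction, lens distortion correction, and band registration depend on the specific camera and are not shown.

```python
def empirical_line(panel_dn, panel_reflectance):
    """Least-squares fit of reflectance = gain * DN + offset from >= 2 panels."""
    n = len(panel_dn)
    mean_dn = sum(panel_dn) / n
    mean_ref = sum(panel_reflectance) / n
    sxx = sum((d - mean_dn) ** 2 for d in panel_dn)
    sxy = sum((d - mean_dn) * (r - mean_ref)
              for d, r in zip(panel_dn, panel_reflectance))
    gain = sxy / sxx
    return gain, mean_ref - gain * mean_dn


def calibrate(band_dn, gain, offset):
    """Convert a band stored as rows of DN values to reflectance."""
    return [[gain * dn + offset for dn in row] for row in band_dn]


def vegetation_mask(nir, red, threshold=0.2):
    """True where (NIR - red) / (NIR + red) exceeds a placeholder threshold;
    the remaining pixels are treated as soil/background."""
    return [[(n - r) / (n + r) > threshold if n + r > 0 else False
             for n, r in zip(nir_row, red_row)]
            for nir_row, red_row in zip(nir, red)]


# Hypothetical 800 nm band: two panels (5% and 50% reflectance), 1x2 image.
gain, offset = empirical_line([5000, 30000], [0.05, 0.50])
nir = calibrate([[27000, 16000]], gain, offset)
red = [[0.12, 0.20]]  # assume the 700 nm band was calibrated the same way
print(vegetation_mask(nir, red))
```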